“This article explains artificial neural networks in clear technical terms, examining their structure, optimization, and evolution within machine learning and artificial intelligence.”

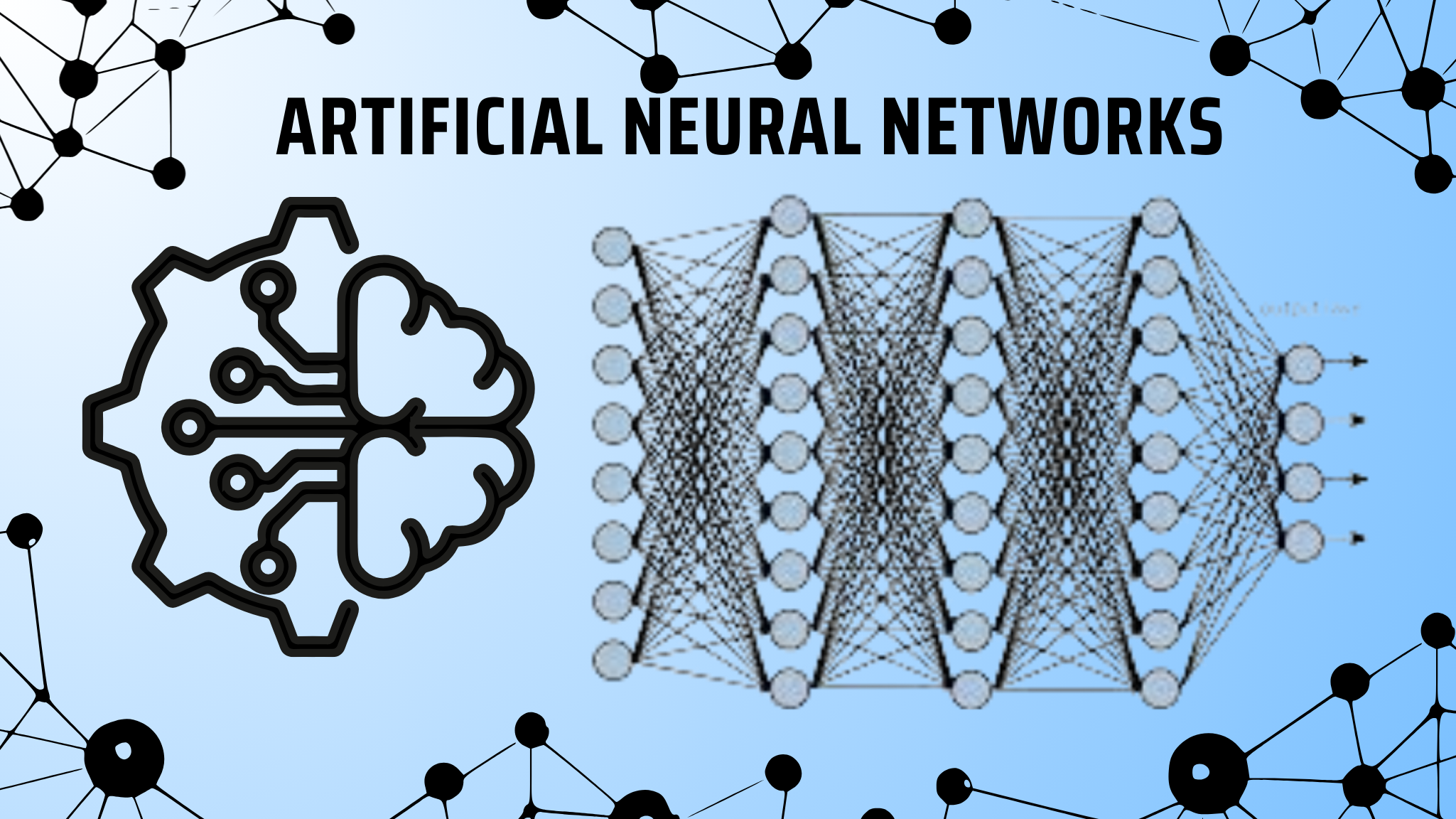

Artificial Neural Networks Explained: Architecture, Training, and Historical Evolution

Artificial neural networks have become one of the most influential computational models in modern artificial intelligence. From image classification systems to adaptive control mechanisms, these models are now deeply embedded in contemporary machine learning solutions. Often abbreviated as ANN, an artificial neural network is inspired by biological neural networks and designed to process information through interconnected artificial neurons. This article presents a comprehensive professional overview of artificial neural networks, covering their origins, theoretical foundations, architecture, training methodology, optimization techniques, and real-world applications.

Foundations of Artificial Neural Networks

An artificial neural network is a computational framework designed to approximate complex functions through layered transformations of data. The fundamental concept behind ANN is drawn from the structure and behavior of biological neural networks found in the human brain. Neurons in biological systems transmit signals through synapses, adapting over time based on experience. Similarly, artificial neurons process numerical inputs, apply transformations, and pass results forward through a neural net.

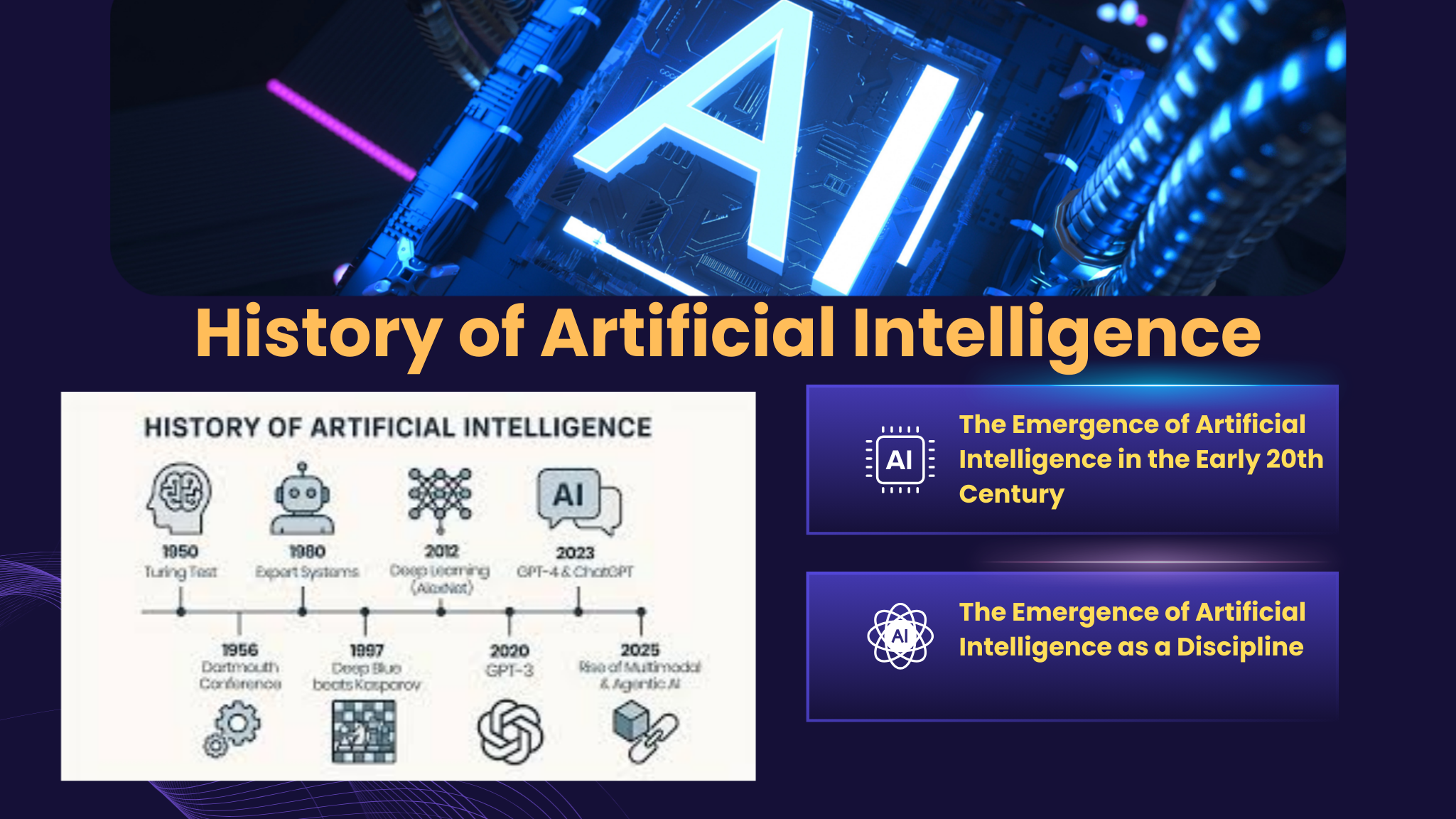

Early research into neural networks was heavily influenced by neuroscience and mathematics. The idea of modeling cognition using computational units dates back to the 1940s when Warren McCulloch and Walter Pitts introduced a simplified mathematical model of neurons. Their work demonstrated that logical reasoning could be simulated using networks of threshold-based units, laying the groundwork for future neural network architectures.
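The threshold-unit idea can be sketched in a few lines of Python. This is a simplified illustration that assumes signed weights and a firing threshold; the function names are hypothetical, and the original 1943 model handled inhibitory inputs differently. Composing the gates also shows how a small network of units computes XOR, which no single unit of this kind can represent.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted input sum reaches the threshold, else stay silent (0)."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

# Logic gates as single threshold units, with weights and thresholds set by hand.
def and_gate(a, b):
    return threshold_unit([a, b], [1, 1], threshold=2)

def or_gate(a, b):
    return threshold_unit([a, b], [1, 1], threshold=1)

def not_gate(a):
    return threshold_unit([a], [-1], threshold=0)  # negative weight inverts the input

def xor_gate(a, b):
    # XOR needs a small network of units: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

Each gate is fixed by hand here; the units themselves do not learn, which is the gap the perceptron later addressed.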

The perceptron, introduced by Frank Rosenblatt in the late 1950s, represented a major milestone in the history of neural networks. As one of the earliest machine learning algorithms, the perceptron could learn linear decision boundaries from labeled training data. Although a single perceptron can only separate classes with a linear boundary, and therefore cannot represent functions such as XOR, it established the feasibility of neural network training through data-driven learning processes.
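A minimal sketch of a perceptron trained with the classic error-driven update rule follows. The learning rate, epoch limit, and logical-AND example are illustrative choices, not details taken from Rosenblatt's original work.

```python
def predict(weights, bias, x):
    """Perceptron output: 1 if w·x + b > 0, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0

def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Error-driven updates: w <- w + lr * (y - y_hat) * x, and likewise for the bias."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias

# Logical AND is linearly separable, so the perceptron converges.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y_and = [0, 0, 0, 1]
w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

With XOR labels `[0, 1, 1, 0]` the loop never reaches an error-free epoch, because no single straight line separates the two classes; it simply stops after `epochs` passes.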

Artificial Neural Network as a Computational Model

At its core, an artificial neural network functions as a layered computational model. It maps inputs to outputs by passing data through multiple transformations governed by weights and biases. Each artificial neuron receives signals, computes a weighted sum, applies an activation function, and forwards the result to the next layer.
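As a concrete illustration, the computation of a single neuron takes only a few lines. The inputs, weights, bias, and choice of a sigmoid activation here are hypothetical, picked to keep the arithmetic easy to follow.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical values: z = 0.2 - 0.3 - 0.4 + 0.1 = -0.4
output = neuron([0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.2], bias=0.1, activation=sigmoid)
print(round(output, 4))  # 0.4013
```

Changing the bias shifts `z` for every input, which is how a bias moves the point at which the neuron "switches on".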

The basic ANN architecture consists of three primary components: the input layer, hidden layers, and output layer. The input layer serves as the interface between raw data and the network. The output layer produces the final predictions, whether they represent classifications, probabilities, or continuous values.

Between these layers lie one or more hidden layers. Hidden layers are responsible for feature extraction and pattern recognition. By stacking multiple hidden layers, neural networks can learn increasingly abstract representations of data, a property that underpins deep learning and deep neural networks.
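The layered structure can be sketched as a loop that feeds each layer's output into the next. This is a minimal pure-Python sketch that assumes fully connected layers; the network size (2 inputs, 3 hidden units, 1 output) and all parameter values are hand-picked for illustration rather than learned.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: row j of `weights` holds the weights into output neuron j."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass x through each (weights, biases, activation) layer in turn."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x

# Hypothetical network: input layer (2 values) -> hidden layer (3 ReLU units) -> output (1 unit).
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0], relu),  # hidden layer
    ([[1.0, 2.0, -1.0]], [0.5], identity),                            # output layer
]
print(forward([2.0, 1.0], layers))  # [4.5]
```

Here the hidden layer computes `[1.0, 1.5, 0.0]` (ReLU switches off the third unit), and the output layer combines these into 4.5. Production code uses matrix libraries for speed, but the arithmetic is the same.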

Activation Functions and Signal Transformation

Activation functions play a critical role in the behavior of artificial neural networks. Without them, a neural network would collapse into a linear model regardless of depth. By introducing non-linearity, activation functions enable neural nets to approximate complex, non-linear relationships.
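The collapse is easy to check numerically. The sketch below uses arbitrary illustrative weights to stack two linear layers with no activation between them, then shows that a single linear layer with W = W2·W1 and b = W2·b1 + b2 produces the same output.

```python
def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    # (A @ B)[i][j] = sum over k of A[i][k] * B[k][j]
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

# Two stacked linear layers with no activation in between (hypothetical values).
W1, b1 = [[1.0, 2.0], [3.0, -1.0]], [0.5, -0.5]
W2, b2 = [[2.0, 0.0], [1.0, 1.0]], [1.0, 0.0]

def two_layer(x):
    return add(matvec(W2, add(matvec(W1, x), b1)), b2)

# The equivalent single linear layer.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

x = [1.0, -2.0]
print(two_layer(x), add(matvec(W, x), b))  # [-4.0, 2.0] [-4.0, 2.0]
```

Inserting a non-linearity such as ReLU between the two layers breaks this equivalence, which is what lets depth add expressive power.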

Common activation functions include sigmoid, hyperbolic tangent, and the ReLU activation function. ReLU, or Rectified Linear Unit, has become particularly popular in deep learning due to its computational efficiency and reduced risk of vanishing gradients. The choice of activation function significantly impacts learning speed, stability, and overall performance.
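The gradient behavior behind this preference shows up when comparing derivatives. In this sketch the sigmoid and tanh derivatives shrink toward zero for large |z|, and the sigmoid derivative never exceeds 0.25, while the ReLU derivative is exactly 1 for any positive input. Treating the ReLU derivative at z = 0 as 0 is a convention assumed here.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # maximum 0.25, reached at z = 0

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # maximum 1.0, reached at z = 0

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0    # assumed convention: gradient 0 at z = 0

for z in (-5.0, 0.0, 5.0):
    print(f"z={z:+.1f}  sigmoid'={sigmoid_grad(z):.4f}  "
          f"tanh'={tanh_grad(z):.4f}  relu'={relu_grad(z):.1f}")
```

Because backpropagation multiplies such derivatives layer by layer, ten sigmoid layers can scale a gradient by at most 0.25 to the power 10 (ignoring the weights), which is under one millionth.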

Weights, Biases, and Learning Dynamics

Weights and biases define the internal parameters of an artificial neural network. Weights determine the strength of connections between neurons, while biases allow flexibility in shifting activation thresholds. During the learning process, these parameters are adjusted to minimize errors between predicted and actual outputs.

Learning in ANN is fundamentally an optimization problem. The objective is to find a set of weights and biases that minimize a predefined loss function. This loss function quantifies prediction errors and guides the direction of parameter updates.

Neural Network Training and Optimization

Neural network training involves iteratively improving model parameters using labeled training data. The most common training paradigm relies on supervised learning, where each input is paired with a known target output. The network generates predictions, computes errors using a loss function, and updates parameters accordingly.

Empirical risk minimization is the guiding principle behind neural network training: it seeks the parameters that minimize the average loss over the training dataset. The gradient of this loss with respect to each parameter measures how a small change in that parameter affects the loss, and gradient-based optimizers use this information to adjust weights in a direction that improves model performance.
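In symbols, one common way to write this objective and the basic gradient-descent update is shown below. The notation is assumed for illustration: θ collects all weights and biases, f_θ is the network, ℓ is the per-example loss, (x_i, y_i) are the N labeled training pairs, and η is the learning rate.

```latex
\hat{R}(\theta) = \frac{1}{N} \sum_{i=1}^{N} \ell\big(f_\theta(x_i),\, y_i\big),
\qquad
\theta \leftarrow \theta - \eta \, \nabla_\theta \hat{R}(\theta)
```

Each update moves θ a small step against the gradient, the direction in which the average training loss decreases most steeply.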

Backpropagation is the algorithm that enables efficient computation of gradients in multilayer neural networks. By propagating errors backward from the output layer to earlier layers and applying the chain rule at each step, backpropagation calculates gradients for all parameters in the network in a single backward pass. This method made training multilayer networks practical and remains central to modern deep learning systems.
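To make the backward pass concrete, here is hand-derived backpropagation for a hypothetical network with one input, two tanh hidden units, and one linear output, using squared error on a single example. It is a minimal sketch rather than a general automatic-differentiation engine. The final lines compare one gradient against a central finite-difference estimate, a standard way to check backpropagation code.

```python
import math

def forward(params, x):
    """Hypothetical tiny network: 1 input -> 2 tanh hidden units -> 1 linear output."""
    h = [math.tanh(params["w1"][j] * x + params["b1"][j]) for j in range(2)]
    y_hat = sum(params["w2"][j] * h[j] for j in range(2)) + params["b2"]
    return h, y_hat

def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2  # squared error on a single example

def backprop(params, x, y):
    """Chain rule applied from the output layer back to the first layer."""
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y                                    # dL/dy_hat
    d_h = [d_yhat * params["w2"][j] for j in range(2)]    # error sent back to each hidden unit
    d_z = [d_h[j] * (1.0 - h[j] ** 2) for j in range(2)]  # tanh'(z) = 1 - tanh(z)^2
    return {
        "w2": [d_yhat * h[j] for j in range(2)],
        "b2": d_yhat,
        "w1": [d_z[j] * x for j in range(2)],
        "b1": d_z,
    }

params = {"w1": [0.5, -0.3], "b1": [0.1, 0.2], "w2": [0.7, -1.2], "b2": 0.05}
x, y = 1.5, 0.4
grads = backprop(params, x, y)

# Gradient check: nudge w1[0] up and down and compare with the analytic value.
eps = 1e-6
up = {**params, "w1": [params["w1"][0] + eps, params["w1"][1]]}
down = {**params, "w1": [params["w1"][0] - eps, params["w1"][1]]}
numeric = (loss(up, x, y) - loss(down, x, y)) / (2 * eps)
print(grads["w1"][0], numeric)  # the two numbers should agree closely
```

One forward pass and one backward pass yield gradients for all seven parameters at once, whereas the finite-difference check needs two extra forward passes per parameter.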

Stochastic gradient descent and its variants are widely used for parameter optimization. Rather than computing gradients over the entire dataset, stochastic gradient descent estimates them from a single example or a small random minibatch at each step. This makes each update far cheaper, and the noise in these estimates can help the optimizer move past shallow local minima and saddle points.
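A minimal minibatch SGD loop is sketched below. To keep it short, it fits a hypothetical linear model y ≈ w·x + b on synthetic data instead of a full network; with a neural network, the loop is the same except that the gradients would come from backpropagation. The learning rate, batch size, and epoch count are illustrative, not tuned.

```python
import random

def minibatch_sgd(data, lr=0.1, batch_size=4, epochs=200, seed=0):
    """Fit y ≈ w*x + b by minibatch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)  # copy so shuffling does not modify the caller's list
    for _ in range(epochs):
        rng.shuffle(data)  # new sample order each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of (1/m) * sum (w*x + b - y)^2 over this minibatch only.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Synthetic, noise-free data from the hypothetical target y = 3x + 1.
xs = [i / 10 for i in range(-10, 11)]
data = [(x, 3 * x + 1) for x in xs]
w, b = minibatch_sgd(data)
print(round(w, 3), round(b, 3))
```

Setting `batch_size=1` gives classic one-example SGD; larger batches give smoother but more expensive gradient estimates. Reshuffling each epoch keeps the minibatches from repeating in the same order.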

Neural Networks in Machine Learning Context

Neural networks in machine learning differ from traditional rule-based systems by learning directly from data. Instead of explicitly programming behavior, engineers define a model structure and allow the learning process to infer relationships from examples. This data-driven approach has proven particularly effective for tasks involving high-dimensional inputs and ambiguous patterns.

Artificial neural networks excel at predictive modeling, where the goal is to estimate future outcomes based on historical data. Applications range from financial forecasting to medical diagnosis and demand prediction. Their adaptability also makes them suitable for adaptive control systems, where models continuously adjust behavior in response to changing environments.

Feedforward Neural Networks and Multilayer Perceptrons

The feedforward neural network is the simplest and most widely studied ANN architecture. In this structure, information flows in one direction from input to output without feedback loops. The multilayer perceptron is a classic example of a feedforward neural network with one or more hidden layers.

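Even a multilayer perceptron with a single hidden layer can approximate any continuous function on a closed, bounded input domain to arbitrary accuracy, provided it has enough hidden units and a suitable non-linear activation function. This result, known as the universal approximation theorem, underscores the expressive power of artificial neural networks, although it only guarantees that suitable weights exist, not that training will find them or how many units are required.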
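A one-dimensional construction gives some intuition for the universal approximation theorem, though it is not a proof. The sketch below builds a single hidden layer of ReLU units by hand so that the network linearly interpolates f(x) = x² at evenly spaced points on [0, 1]; the target function and knot placement are assumptions chosen for illustration.

```python
def relu(z):
    return max(0.0, z)

def build_relu_net(f, n_hidden, lo=0.0, hi=1.0):
    """One hidden layer of ReLU units that linearly interpolates f at n_hidden + 1 even knots."""
    h = (hi - lo) / n_hidden
    knots = [lo + k * h for k in range(n_hidden + 1)]
    slopes = [(f(knots[k + 1]) - f(knots[k])) / h for k in range(n_hidden)]
    # Hidden unit k computes relu(x - knot_k); its output weight is the change in slope there.
    out_weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, n_hidden)]
    bias = f(lo)

    def net(x):
        return bias + sum(c * relu(x - t) for c, t in zip(out_weights, knots[:-1]))
    return net

def target(x):
    return x * x

grid = [i / 1000 for i in range(1001)]
for n in (2, 4, 8, 16):
    net = build_relu_net(target, n)
    max_err = max(abs(net(x) - target(x)) for x in grid)
    print(n, round(max_err, 6))
```

For x², linear interpolation with spacing h has maximum error h²/4, so doubling the number of hidden units cuts the error by roughly a factor of four. Training by gradient descent would not recover this exact construction.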

Despite their simplicity, feedforward networks remain highly relevant. They are commonly used for regression, classification, and pattern recognition tasks where temporal dependencies are minimal.

Deep Neural Networks and Deep Learning

Deep learning refers to the use of deep neural networks containing multiple hidden layers. The depth of these models allows them to learn hierarchical representations of data. Lower layers capture simple features, while higher layers represent complex abstractions.

Deep neural networks have revolutionized fields such as computer vision and natural language processing. Their success is closely tied to advances in computational hardware, large-scale labeled training data, and improved training algorithms.

Convolutional Neural Networks and Feature Extraction

Convolutional neural networks, often abbreviated as CNN, are a specialized class of deep neural networks designed for grid-like data such as images. CNNs incorporate convolutional layers that automatically perform feature extraction by scanning filters across input data.
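The scanning operation can be sketched directly. This minimal version assumes a single-channel image, one filter, stride 1, and no padding; as in most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The edge-detecting kernel is hand-made for illustration, whereas a CNN learns its kernel values during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (cross-correlation, no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 4x5 image with a vertical edge between dark (0) and bright (1) columns.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hypothetical vertical-edge filter.
kernel = [
    [-1, 1],
    [-1, 1],
]
for row in conv2d(image, kernel):
    print(row)  # [0, 2, 0, 0] on each of the three rows
```

The output responds only where dark pixels meet bright ones, and the same four kernel weights are reused at every position.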

Because each filter's weights are shared across all positions and each output depends only on a local patch of the input, this architecture uses far fewer parameters than a fully connected network while preserving spatial structure. CNNs have long been a dominant approach for image classification, object detection, and visual pattern recognition.
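The savings are simple arithmetic. The sketch below counts weights and biases for a hypothetical layer that maps a 32×32 RGB image to 16 feature maps of the same size, once as a fully connected layer and once as a 3×3 convolution (assuming padding that preserves the 32×32 size).

```python
def dense_params(n_in, n_out):
    # Every input connects to every output, plus one bias per output.
    return n_in * n_out + n_out

def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    # Each filter spans all input channels and is shared across positions; one bias per filter.
    return kernel_h * kernel_w * in_channels * out_channels + out_channels

# Hypothetical layer: 32x32 RGB input, 16 output feature maps of 32x32.
h, w, c_in, c_out = 32, 32, 3, 16
print(dense_params(h * w * c_in, h * w * c_out))  # 50348032
print(conv_params(3, 3, c_in, c_out))             # 448
```

The convolutional count does not depend on image size at all, which is why the same layer can be applied to larger images without adding parameters.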

Transfer learning is commonly applied with convolutional neural networks. In this approach, a model trained on a large dataset is adapted to a new task with limited data. Transfer learning reduces training time and improves performance in many artificial intelligence applications.

Loss Functions and Model Evaluation

The loss function defines what the neural network is trying to optimize. Different tasks require different loss functions. For classification problems, cross-entropy loss is frequently used, while mean squared error is common in regression tasks.
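Both losses are short formulas. The sketch assumes a classifier that outputs raw scores (logits), which softmax converts into probabilities before cross-entropy is applied; the example values are arbitrary.

```python
import math

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(true_class, logits):
    """Negative log-probability the model assigns to the correct class."""
    return -math.log(softmax(logits)[true_class])

print(mean_squared_error([3.0, -0.5, 2.0], [2.5, 0.0, 2.0]))
print(cross_entropy(0, [2.0, 1.0, 0.1]))  # highest score on the true class: lower loss
print(cross_entropy(2, [2.0, 1.0, 0.1]))  # lowest score on the true class: higher loss
```

Cross-entropy grows without bound as the probability assigned to the true class approaches zero, so confidently wrong predictions are penalized heavily.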

Choosing an appropriate loss function is critical for stable neural network training. The loss must align with the problem’s objectives and provide meaningful gradients for optimization. Evaluation metrics such as accuracy, precision, recall, and error rates complement loss values by offering task-specific performance insights.
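These metrics can be computed from counts of true positives, false positives, and false negatives. The labels below are hypothetical and deliberately imbalanced; returning 0.0 when a denominator is zero is an assumed convention.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that are found
    return accuracy, precision, recall

# Hypothetical imbalanced labels: only 2 of 10 examples are positive.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
print(classification_metrics(y_true, y_pred))  # (0.9, 1.0, 0.5)
```

Accuracy is 0.9 even though the model finds only half of the positive cases, which is exactly the gap that recall exposes.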

Artificial Neural Networks and Artificial Intelligence

Artificial neural networks form a foundational pillar of artificial intelligence. They enable machines to perform tasks that traditionally required human cognition, such as visual perception, speech recognition, and decision-making. As part of a broader artificial intelligence ecosystem, ANN models can be combined with symbolic reasoning systems, reinforcement learning agents, and probabilistic models.

The relationship between ANN and artificial intelligence is not merely technical but philosophical. Neural networks challenge traditional views of intelligence by demonstrating that complex behavior can emerge from simple computational units interacting at scale.

Historical Evolution and Scientific Authority

Understanding the history of neural networks provides valuable context for their current prominence. Early enthusiasm for neural nets waned during periods known as AI winters, largely due to computational limitations and theoretical critiques such as Marvin Minsky and Seymour Papert's 1969 book Perceptrons, which highlighted the limits of single-layer networks. Symbolic approaches, which manipulated explicit rules and logic on conventional von Neumann computers, dominated early artificial intelligence research.

Renewed interest emerged in the 1980s with the rediscovery of backpropagation and advances in hardware. Subsequent breakthroughs in deep learning during the 2010s cemented neural networks as a central paradigm in machine learning.

The contributions of pioneers such as Warren McCulloch, Walter Pitts, Frank Rosenblatt, and proponents of Hebbian learning continue to influence contemporary research. Their foundational ideas underpin modern neural network architectures and training methodologies.

Ethical and Practical Considerations

While artificial neural networks offer remarkable capabilities, they also present challenges. Issues related to interpretability, bias, and robustness remain active areas of research. Because neural networks operate as complex parameterized systems, understanding their internal decision-making processes can be difficult.

Efforts to improve transparency include explainable artificial intelligence techniques that aim to clarify how models arrive at specific predictions. Addressing these concerns is essential for responsible deployment in high-stakes domains such as healthcare, finance, and autonomous systems.

Future Directions of Artificial Neural Networks

The future of artificial neural networks is closely tied to ongoing research in architecture design, optimization, and integration with other learning paradigms. Hybrid models combining neural networks with symbolic reasoning and probabilistic inference are gaining attention.

Advancements in unsupervised and self-supervised learning aim to reduce reliance on labeled training data. Meanwhile, neuromorphic computing seeks to replicate the efficiency of biological neural networks at the hardware level.

As neural networks in machine learning continue to evolve, their role in artificial intelligence applications is expected to expand further, shaping how machines perceive, learn, and interact with the world.

Conclusion

Artificial neural networks represent one of the most powerful and versatile tools in modern machine learning. Rooted in biological inspiration and refined through decades of research, ANN models provide a robust framework for solving complex computational problems. By understanding their architecture, learning process, historical development, and applications, professionals can better leverage neural networks for innovative and responsible artificial intelligence solutions.

From the early perceptron to today’s deep neural networks, the evolution of ANN reflects a broader shift toward data-driven intelligence. As research advances and applications diversify, artificial neural networks will remain central to the future of intelligent systems.

FAQs

1. What problem do artificial neural networks solve in machine learning?

Artificial neural networks are designed to model complex, non-linear relationships in data, making them effective for tasks where traditional algorithms struggle, such as pattern recognition, prediction, and feature learning.

2. How does an artificial neural network differ from conventional algorithms?

Unlike rule-based algorithms, artificial neural networks learn directly from data by adjusting internal parameters, allowing them to adapt to new patterns through retraining rather than explicit reprogramming.

3. Why are hidden layers important in neural network architecture?

Hidden layers enable a neural network to extract and transform features at multiple levels of abstraction, which is essential for learning complex representations in high-dimensional data.

4. What role does backpropagation play in neural network learning?

Backpropagation efficiently computes the gradient of the loss with respect to every parameter by propagating prediction errors backward through the network; an optimizer such as stochastic gradient descent then uses these gradients to update all layers simultaneously.

5. How do activation functions influence neural network performance?

Activation functions introduce non-linearity into neural networks, directly affecting their learning capacity, convergence behavior, and ability to model complex data relationships.

6. In which industries are artificial neural networks most widely applied?

Artificial neural networks are widely used in industries such as healthcare, finance, manufacturing, transportation, and technology, supporting applications like diagnostics, forecasting, automation, and decision support.

7. What are the main limitations of artificial neural networks?

Key limitations include high data requirements, computational cost, limited interpretability, and sensitivity to biased or low-quality training data.