Complete UI/UX Designer Interview Preparation Guide

This comprehensive guide on UI/UX designer interview preparation explains how to confidently approach interviews by mastering portfolio presentation, UX principles, problem-solving strategies, soft skills, and … Read more

-

-

Top 20 Java Full Stack Developer Interview Questions and Answers

Designed for aspiring and experienced professionals, this article explores the top interview questions for Java full stack developers, offering clear explanations across full stack Java … Read more

-

Java Programming Language Explained for Modern Development

This article provides a comprehensive overview of the Java programming language, highlighting its evolution, technical strengths, and real-world applications that continue to make it a … Read more

-

IT Training Institute in California for Data Science and AI Roles

This article provides a comprehensive guide to selecting an IT training institute in California, focusing on emerging technologies, skill-based learning, and long-term career readiness. How … Read more

-

Full Stack Development in 2026: Skills & Career Trends

This report explores how full stack development is evolving in 2026, highlighting the skills, technologies, and career opportunities shaping the future of modern software professionals … Read more

-

Latest AI Developments Transforming Work and Business

This article explores the most impactful recent advances in artificial intelligence and examines how technologies such as generative AI, agentic systems, and Edge AI are … Read more

-

Gemini Personal Intelligence Brings Smarter AI Assistants

This report examines Google Gemini’s new Personal Intelligence feature, its impact on AI productivity, and the privacy considerations behind personalized AI systems. Google’s Gemini AI … Read more

-

Meta Temporarily Blocks Teen Access to AI Characters

Meta has announced a temporary pause on teen access to its AI characters as the company works on a redesigned version of the feature aimed … Read more

-

OpenAI Practical Adoption Becomes Core Focus for 2026

OpenAI is reshaping its long-term strategy around a single objective: making advanced artificial intelligence usable, scalable, and economically relevant in real-world environments. As the company … Read more

-

Grok AI Controversy Exposes AI Safety Gaps

A closer look at how Grok’s rapid rollout and limited safeguards exposed deeper risks in AI governance, platform moderation, and responsible innovation. Concerns surrounding … Read more

-

New AI Research Breakthroughs Shaping the Future

This article provides a comprehensive overview of key AI advancements, highlighting their impact across industries, research, and career pathways. The Latest AI Breakthroughs Reshaping … Read more

-

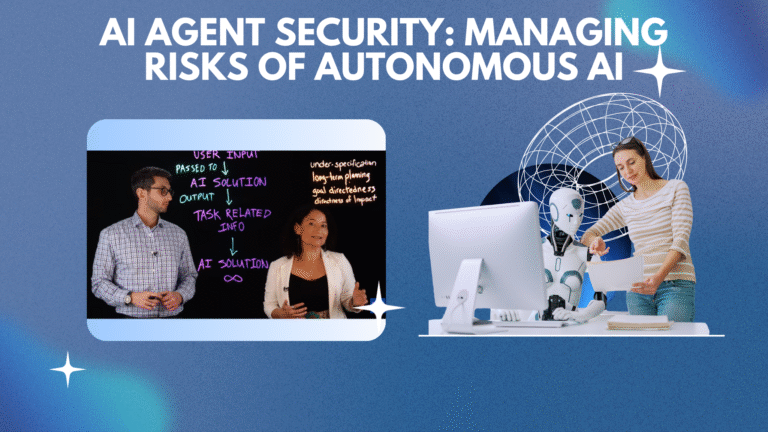

AI Agent Security: Managing Risks of Autonomous AI

As AI agents gain the ability to act independently across enterprise systems, this report explores the emerging security risks of agentic AI, why traditional defenses … Read more

-

Google AI Videomaker Flow Expands to Workspace Users

Google has expanded its AI video creation tool Flow to Workspace users, enabling businesses, educators, and enterprises to generate and edit short videos using text … Read more

-

Conversational AI Transforms Retail Analytics and Pricing

Retailers are increasingly adopting conversational AI tools to turn predictive analytics into real-time commercial decisions, reshaping how pricing, merchandising, and assortment strategies are planned and … Read more

-

Pakistan Partners with Meta for AI Teacher Training

Pakistan teams up with Meta and Atomcamp to train university faculty in artificial intelligence, aiming to modernize higher education and equip educators with the skills … Read more

-

AI in Content Writing: How Writers Use AI Tools Without Losing Their Voice

Artificial intelligence is reshaping content writing by helping writers plan, draft, and edit more efficiently, and this report explains how AI writing tools work, where … Read more

-

Australia Teen Social Media Ban Forces Meta Crackdown

Australia’s new teen social media ban is reshaping how global platforms operate, as Meta moves to enforce age-based access restrictions while raising questions about the … Read more

-

Advocacy Groups Urge Apple, Google to Act on Grok Deepfake Abuse

A growing coalition of advocacy groups is urging Apple and Google to remove X and its AI tool Grok from their app stores, warning that … Read more

-

Google Gemini AI Leads the AI Race Against OpenAI and ChatGPT

Google is emerging as the frontrunner in the global artificial intelligence race, leveraging its Gemini model, proprietary infrastructure, and vast product ecosystem to shape the … Read more

-

X Faces Scrutiny as Grok Deepfake Images Continue to Surface

Despite X’s assurances of tighter AI controls, this article examines how Grok continues to generate nonconsensual deepfake images, the growing regulatory backlash in the UK, … Read more

-

UK Deepfake Law Targets AI-Created Nudes Amid Grok Controversy

The UK is enforcing a new law that makes the creation of nonconsensual AI-generated intimate images a criminal offense, tightening platform accountability and accelerating regulatory … Read more

-

Claude Cowork Brings Practical AI Agents to Everyday Workflows

Anthropic’s latest Claude Cowork feature signals a shift toward practical AI agents that can manage files, automate tasks, and collaborate alongside users as a true … Read more

-

Google Gemini Buy Buttons Signal a New Era of AI Shopping

Google is expanding Gemini into a transactional platform, bringing AI-powered shopping, native checkout, and a new open commerce standard to AI search. Google is accelerating … Read more

-

Gmail AI Inbox Feature Could Transform How You Manage Your Inbox

Google’s new AI Inbox for Gmail reimagines email management by using artificial intelligence to generate summaries, suggest tasks, and organize messages, offering a glimpse into … Read more

-

Google Pulls AI Overviews From Medical Searches After Accuracy Concerns

Google’s decision to disable AI Overviews for certain medical searches highlights growing concerns over the accuracy, safety, and responsibility of AI-generated health information in online … Read more

-

Character.AI and Google Reach Settlement in Teen Suicide Lawsuits

This report examines how Character.AI and Google are resolving multiple lawsuits over allegations that AI chatbot interactions contributed to teen self-harm, highlighting growing legal scrutiny … Read more

-

AI Foreign Policy and National Security: Jake Sullivan on US-China Tech Risks

Former White House adviser Jake Sullivan warns that reversing US AI export controls could reshape global technology competition and national security, highlighting the high-stakes intersection … Read more

-

Lenovo AI Glasses Concept Unveiled at CES 2026

Lenovo has unveiled a concept pair of AI-powered smart glasses at CES 2026, offering an early look at its vision for lightweight wearable technology featuring … Read more

-

Lenovo Qira AI Assistant Can Act on Your Behalf Across Devices

Lenovo’s latest CES announcement introduces Qira, a system-level, cross-device AI assistant designed to seamlessly operate across laptops and smartphones, blending on-device and cloud intelligence to … Read more

-

Nvidia Introduces the Vera Rubin Platform to Advance AI Computing

This report explores Nvidia’s early unveiling of the Vera Rubin AI computing platform at CES 2026, highlighting how its new architecture aims to deliver higher … Read more

-

Gemini on Google TV Update Brings Nano Banana AI and Smart Voice Features

Google’s latest Gemini on Google TV update introduces generative AI features that bring intelligent visuals, interactive voice controls, and real-time insights to smart televisions, reshaping … Read more

-

LG CLOiD Home Robot at CES: AI-Powered Laundry, Cooking, and Home Automation

At CES, LG unveils its CLOiD home robot, offering a practical look at how AI-powered robotics could transform everyday living through automated laundry, smart cooking, … Read more

-

How AI Analyzes Social Media to Predict Crypto Trends

This report explores how artificial intelligence is reshaping the relationship between social media and cryptocurrency by analyzing large-scale public sentiment, digital behavior, and data-driven insights … Read more

-

Vevo Music Video Streaming Service: History, Growth & Records

This report explores Vevo’s evolution as a leading music video streaming service, detailing its history, ownership, YouTube partnership, business model, and global impact on the … Read more

-

Tumblr Social Media Platform Explained: What It Is and How It Works

This article provides a comprehensive overview of the Tumblr social media platform, examining its history, ownership changes, core features, content policies, monetization strategy, cultural impact, … Read more

-

VK (VKontakte) Platform Overview: Growth and Influence

VK (VKontakte) is Russia’s largest social media platform, and this report explores its origins, key features, ownership structure, rapid growth, and the controversies shaping its … Read more

-

Zoom Communications: From Video Meetings to AI-First Platform

Zoom Communications has evolved from a simple video conferencing tool into a global, AI-driven collaboration platform, and this article explores its company history, rapid growth, … Read more

-

How Businesses Use Generative AI Today

Generative AI is rapidly becoming a core enterprise capability, and this report explores how businesses across industries are applying AI technologies in real-world scenarios to … Read more

-

What Is Amazon? E-commerce, Cloud and AI Explained

Amazon is a global digital powerhouse that began as an online bookstore and evolved into a multi-industry leader spanning e-commerce, cloud computing, artificial intelligence, logistics, … Read more

-

Google Meet Video Conferencing: Secure Meetings for Work and Education

This article explores how Google Meet video conferencing has evolved into a secure, AI-driven collaboration platform, examining its features, underlying technology, enterprise use cases, and … Read more

-

The Evolution of the Quora Platform in the Global Knowledge Economy

This article provides a comprehensive overview of the Quora platform, covering its history, monetization strategy, user base expansion, and integration of AI-driven technologies such as … Read more

-

iMessage: Apple’s Messaging Service Explained

An in-depth look at iMessage reveals how Apple’s messaging service combines security, ecosystem control, and user experience while facing pressure from interoperability and antitrust regulations. … Read more

-

The Biggest Challenges of Artificial Intelligence Today

Artificial intelligence is rapidly transforming industries and public systems, but its widespread adoption also brings critical challenges related to data privacy, bias, transparency, ethics, and … Read more

-

Advantages of Artificial Intelligence: Real-World Applications and Benefits

Artificial intelligence is transforming industries by enabling smarter decision-making, intelligent automation, and personalized experiences, and this article explores the key advantages of artificial intelligence and … Read more

-

Working of Artificial Intelligence: From Data to Decisions

This article explains the working of artificial intelligence, examining how AI systems collect data, learn through different models, and make decisions across real-world applications. Working … Read more

-

AI in Regulatory Design and Delivery

This article explores how artificial intelligence is reshaping regulatory design and delivery by enabling data-driven policymaking, adaptive governance, smarter enforcement, and more transparent, accountable regulatory … Read more

-

AI Regulatory Landscape: Global Rules, Governance, and Compliance

This article examines the evolving AI regulatory landscape, exploring global regulations, governance frameworks, and compliance strategies that are shaping how artificial intelligence is developed, deployed, … Read more

-

AI Regulation: How Artificial Intelligence Is Governed

This article examines how AI regulation is evolving worldwide, exploring the policies, governance frameworks, and risk-based approaches shaping the responsible development and oversight of artificial … Read more

-

Cultural Impact of AI on Teams in the Modern Workplace

Artificial intelligence is no longer just transforming how work gets done—it is reshaping team dynamics, leadership styles, trust, and workplace culture, making its human impact … Read more

-

AI Bias Mitigation: Challenges, Techniques, and Best Practices

This article explores how bias emerges in artificial intelligence systems, its real-world consequences across industries, and the practical strategies organizations use to build fair, responsible, … Read more

-

Why AI Ethics in Business Matters for Trust and Growth

This article explores how AI ethics has become a strategic business imperative, shaping trust, governance, compliance, and sustainable innovation in modern enterprises. AI Ethics in … Read more

-

Research Data Sources: Open Data, Platforms, and Best Practices

This article explores the evolving landscape of research data sources, examining how open, licensed, and academic datasets—along with modern data platforms—are transforming research, decision-making, and … Read more

-

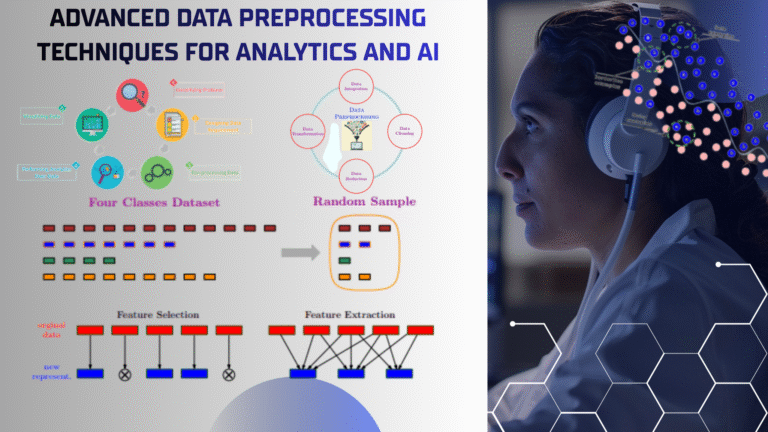

Advanced Data Preprocessing Techniques for Analytics and AI

This in-depth guide explores how data preprocessing in big data analytics transforms raw, complex data into reliable, high-quality inputs through cleaning, integration, transformation, and feature … Read more

-

What Is Data and How AI Uses It

This article explains what data is, why it matters in today’s digital economy, and how it powers decision-making, innovation, and artificial intelligence across industries. Introduction: … Read more

-

Artificial Neural Networks (ANN): A Complete Professional Guide

This article explains artificial neural networks in a clear, technical context, examining their structure, optimization, and evolution within machine learning and artificial intelligence. Artificial Neural … Read more

-

What Is an Algorithm? Meaning, Types, Examples, and Uses

This article offers a clear, end-to-end exploration of algorithms, explaining what they are, how they work, why they matter, and how they are used across … Read more

-

Generative Artificial Intelligence Is Reshaping Modern AI Systems

This article provides a comprehensive, professional overview of how generative artificial intelligence is transforming modern AI systems, from large language models and multimodal capabilities to … Read more

-

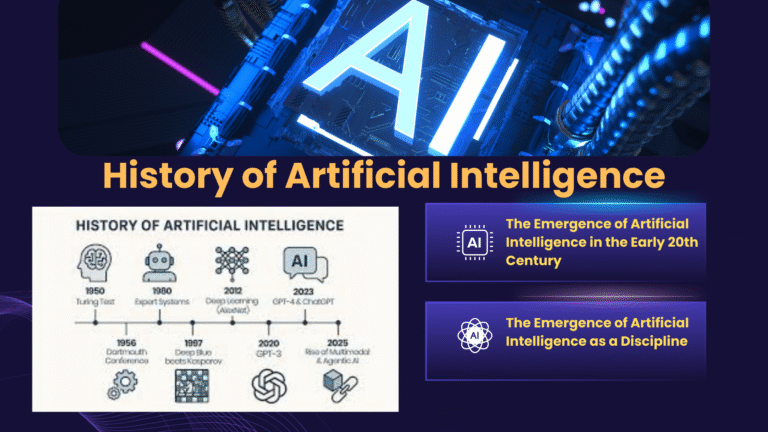

History of Artificial Intelligence: Key Milestones From 1900 to 2025

This article examines the historical development of artificial intelligence, outlining the technological shifts, innovation cycles, and real-world adoption that shaped AI through 2025. History of … Read more

-

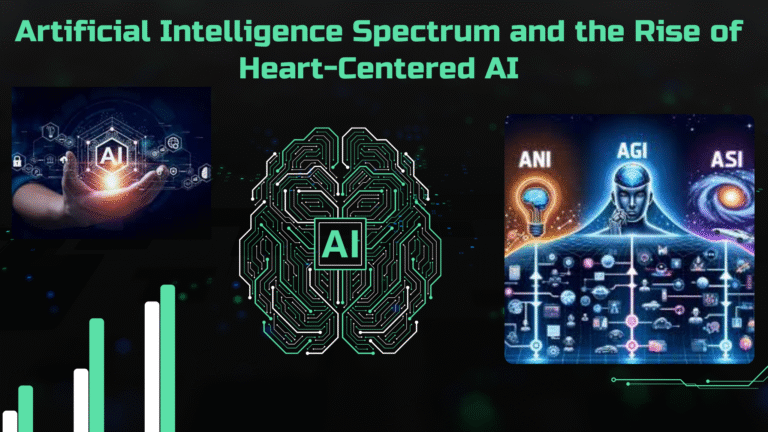

Artificial Intelligence Spectrum and the Rise of Heart-Centered AI

This article explores the artificial intelligence spectrum, tracing the evolution from narrow machine intelligence to future possibilities shaped by human cognition, ethics, and heart-centered understanding. … Read more

-

Impact of Generative AI on Socioeconomic Inequality

This piece outlines how generative AI is transforming economies and institutions, the risks it poses for widening inequality, and the policy choices that will shape … Read more

-

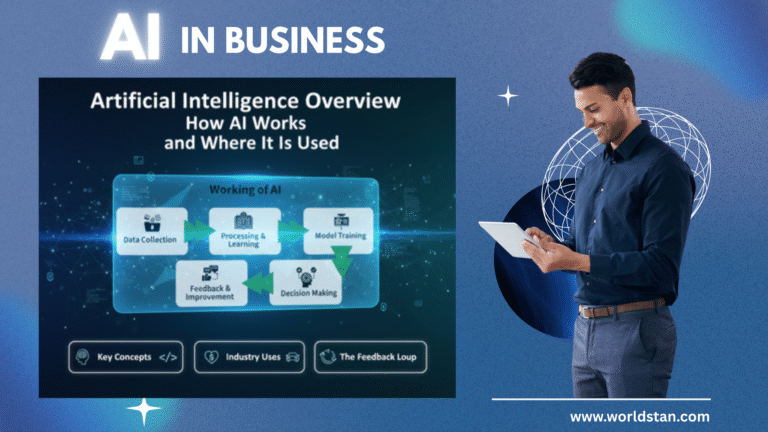

Artificial Intelligence Overview: How AI Works and Where It Is Used

This article provides a comprehensive overview of artificial intelligence, explaining its core concepts, key technologies such as machine learning, generative AI, natural language processing, and … Read more

-

MiniMax AI Foundation Models: Built for Real-World Business Use

This in-depth report explores how MiniMax AI is emerging as a key Chinese foundation model company, examining its core technologies, enterprise-focused innovations, flagship products, and … Read more

-

Ernie Bot 3.5 vs Global LLMs: How Baidu Is Competing in Generative AI

This report explores the launch of Baidu’s Ernie Bot 3.5, examining its technological advancements, knowledge-enhanced architecture, enterprise applications, and its growing role in reshaping the … Read more

-

Tencent Unveils Hunyuan T1: A Powerful Open-Source Reasoning AI Model

Tencent’s open-source AI strategy comes into focus as Hunyuan T1 emerges as a hybrid, reasoning-driven language model designed for enterprise-grade performance, efficient scalability, and real-world … Read more

-

Qwen 2.5 Max vs GPT-4o: How Alibaba’s New LLM Stacks Up

Alibaba Cloud’s Qwen 2.5 Max marks a major step forward in large language model development, combining efficient architecture, long-context reasoning, multimodal intelligence, and enterprise-ready design … Read more

-

iFLYTEK SPARK V4.0 Powers the Next Generation of AI Voice Technology

This report explores how iFLYTEK SPARK V4.0 is reshaping global human-computer interaction through advanced voice AI, multilingual communication, and real-world applications across education, healthcare, and … Read more

-

AI Art Made Easy with the Latest GPT Image Generator

A new-generation GPT image generator that transforms text and photos into high-quality, customizable AI visuals for every creative need. A New Standard in AI Visual … Read more

-

Chinese AI Innovations 2026-27: Models, Chips, and Future Trends

China is rapidly emerging as a global force in artificial intelligence, driving innovation through advanced models, strategic policy initiatives, and technological breakthroughs that are … Read more

-

Advanced AI Coding Partners for Modern Developers

The growing influence of AI-powered software development is reshaping how engineers build, review, and maintain modern applications. As part of the Generative … Read more

-

Exploring AI Applications in Daily Life

Artificial intelligence has quietly integrated into our daily routines, and this overview explores how AI is shaping everyday life through practical applications across technology, business, … Read more

-

The Real Advantages and Disadvantages of AI You Need to Know Today

This article explores the key advantages and disadvantages of artificial intelligence, offering a clear understanding of how AI shapes modern life, business operations, and future … Read more

-

Making AI Accessible to Everyone: Free AI Online (免費線上人工智慧)

A simple and open platform that brings powerful, free, and unlimited AI tools to everyone—anytime, anywhere, without registration or cost. Free AI Online (免費線上人工智慧) – … Read more

-

Free AI Code Writer for Everyone

A quick overview of how FREE AI ONLINE’s new free coding assistant empowers students and learners by generating code instantly and simplifying the entire programming … Read more

-

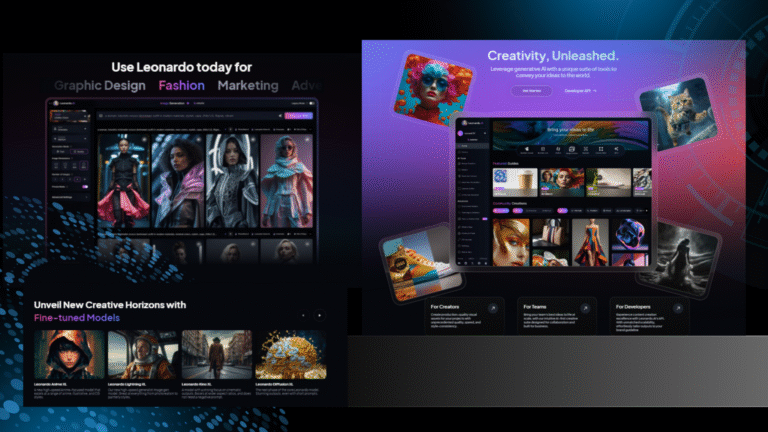

How Leonardo.Ai Empowers Creators with Smart AI Design and Marketing Solutions

Discover how Leonardo.Ai is revolutionizing the world of marketing and design by blending human creativity with generative AI—empowering creators, brands, and businesses to produce professional, … Read more

-

What Is AI Product Design? Tools, Benefits and Real-World Applications

Discover how AI product design is revolutionizing the way modern products are imagined, built, and refined—merging human creativity with intelligent automation to create smarter, faster, … Read more

-

Pocket AI Thought Companion Features, Benefits and Real-World Use Cases

A new generation of AI productivity devices is emerging, and Pocket leads this shift by offering a screen-free companion designed to capture, organize, and clarify … Read more

-

Xiaohongshu China: Where Lifestyle, Influence, and Commerce Meet

Xiaohongshu, also known as Little Red Book, is China’s rapidly evolving social commerce platform that blends lifestyle sharing, influencer culture, and e-commerce innovation—this article explores … Read more

-

What Is the imo App? Everything About Its Launch, Developer, and Key Functions

When was imo launched? Who developed imo? What is the primary function of imo? How many messages are sent through imo per day? What makes … Read more

-

Ideogram AI: The Future of Text to Image Generation

This article examines the evolution of Ideogram AI, a pioneering text-to-image generation platform that merges artificial intelligence with creative design, exploring its history, key model … Read more

-

Midjourney AI Web Interface and Tools

This report explores the rise of Midjourney AI, a leading generative art platform that blends technology and creativity, tracing its development, features, controversies, and its … Read more

-

Discover the Best AI Apps: From ChatGPT and Claude to Gemini and Grok

Explore how artificial intelligence is reshaping the mobile landscape through powerful apps that simplify daily life, enhance creativity, and redefine productivity across every category — … Read more

-

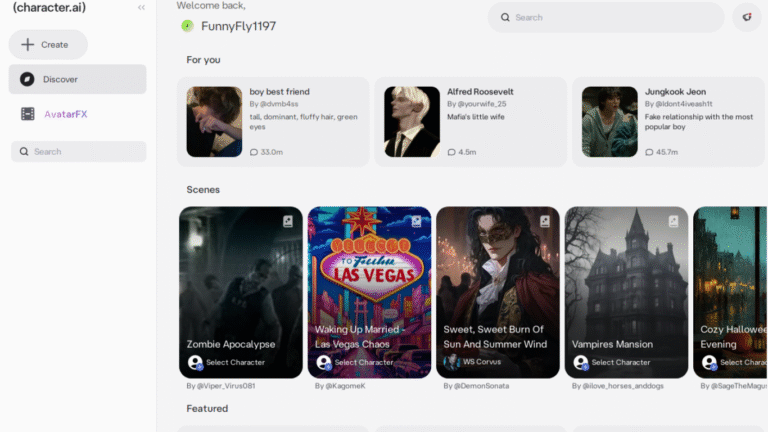

Character AI Chatbot: The Rise of a Generative AI Pioneer

This report explores the evolution of Character AI — from its Google-engineered origins and billion-dollar rise to its innovative features, safety challenges, and growing impact … Read more

-

Perplexity AI vs ChatGPT vs Gemini: Who Wins?

Perplexity AI stands at the crossroads of innovation and controversy — a next-generation search engine redefining how humans interact with information while sparking debates over … Read more

-

Grubby AI vs ChatGPT: The Truth About AI Humanizers

This review explores the effectiveness of AI humanization tools like Grubby AI and ChatGPT, revealing their strengths and limitations in creating natural-sounding, AI-generated content capable … Read more

-

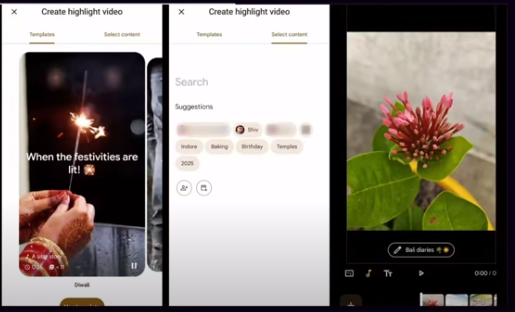

A New Era for Google Photos AI and Android XR

Google Photos is stepping into a new era powered by AI and immersive technology. The platform is evolving beyond simple photo storage, introducing smart editing, … Read more

-

YouTube AI: How the Platform Is Using Artificial Intelligence

Over recent years, YouTube has integrated many AI and generative AI features into its platform for content creation, discovery, moderation, accessibility, and safety. These tools … Read more

-

Elon Musk’s xAI Lays Off 500 Workers in Major Restructuring

In a sweeping move that signals a new direction for Elon Musk’s artificial intelligence company, xAI has laid off around 500 workers from its data … Read more

-

What Is the Talkie AI App? A Complete Guide

In today’s fast-moving digital world, AI chat apps are everywhere, offering instant replies, voice features, and even lifelike conversations. One platform that caught global attention … Read more

-

“What Have We Created?”: OpenAI’s Sam Altman Admits He’s Scared of ChatGPT’s Next Upgrade

“What Have We Created?”: OpenAI’s Sam Altman Admits He’s Scared of ChatGPT’s Next Upgrade. In a rare and candid moment, OpenAI CEO Sam Altman has … Read more

-

Genius Apero App Vietnam: Success Story of a Global All-in-One Mobile Tool

The Genius Apero app is more than just another tool on your phone—it is a powerful, lightweight, and multifunctional mobile application that has become a … Read more

-

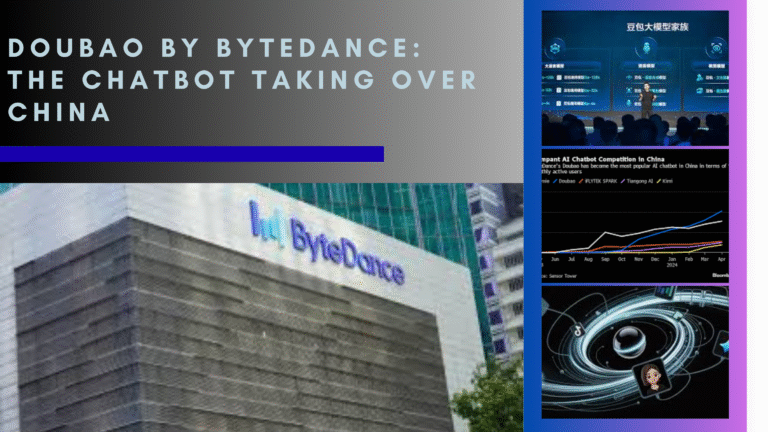

腾讯元宝 (Tencent Yuanbao): The AI Super App Challenging ChatGPT in 2025

Artificial intelligence is reshaping how people work, learn, and communicate. In this fast-moving field, 腾讯元宝 (Tencent Yuanbao) has quickly gained attention as one of … Read more

-

Novaapp.ai Reviews: Nova HubX Turkey Rise, Claims and Warnings

Novaapp.ai and Nova AI are being widely discussed, but not always for good reasons. In this article, you’ll discover novaapp.ai reviews, claims made by the … Read more

-

Cloudwalk Technology: Rise of a Chinese AI Powerhouse

Cloudwalk, often written as CloudWalk Technology or cloud walk, has emerged as one of the most influential players in China’s artificial intelligence revolution. Headquartered in … Read more

-

Cambricon Technologies: China’s AI Chip Powerhouse

Introduction: The Rise of Cambricon: Cambricon, also known as Cambricon Technologies, is one of the most influential Chinese AI chip companies driving innovation in artificial … Read more

-

Ubtech Humanoid Robots: Innovation, Education, and the Future of AI-Powered Machines

Ubtech has become one of the most recognized names in the robotics industry, transforming from a small startup into a global leader. Founded in Shenzhen, … Read more

-

Quark – A Smart Information Hub for Young Users in China (Alibaba China)

Empowering the Next Generation with AI-Driven Tools: Quark has emerged as a go-to digital platform for young users in China, offering a powerful combination of … Read more

-

Quark AI – Alibaba’s Flagship AI-Powered Information Platform

Serving Over 200 Million Users in China: Quark AI, developed by Alibaba Group, has evolved into a … Read more

-

ChatGPT: History, Features, Founders and Future of OpenAI’s AI Assistant (2025 Guide)

Introduction to ChatGPT: ChatGPT is a smart virtual assistant built by OpenAI, a leading artificial intelligence company. Launched on November 30, 2022, ChatGPT was designed … Read more

-

Social Media Is a Cause of Social Change

Social media has become a powerful tool in today’s world, shaping how we communicate and … Read more

-

Social Media Marketing (SMM) – Benefits of SMM

Social Media Marketing (SMM): What It Is, How It Works, Pros and Cons. Introduction: Social media marketing (SMM), a form of digital marketing (also called e-marketing), … Read more

-

Twitch Application (TwitchCon)

When was Twitch Application officially launched as a spin-off of Justin.tv? Who were the founders of Twitch? What was the original purpose of Justin.tv? What … Read more

-

Viber / Rakuten Viber

What is the overview of Rakuten Viber? Who founded Viber Media? When was Rakuten Viber acquired by Rakuten? What platforms is Rakuten Viber accessible on? … Read more

-

Baidu Tieba

What is Baidu Tieba and who created it? Can you explain the primary focus of Baidu Tieba? How many bars does Baidu Tieba have, and … Read more

-

Introduction to SnackVideo: Features of SnackVideo

1- What is the parent company behind SnackVideo’s development and ownership? 2- How does SnackVideo’s Chinese origin influence its operations and content? 3- What sources … Read more

-

Josh

What major accomplishment did VerSe Innovation achieve within three months of Josh’s launch in December 2020? Who were some notable backers that supported VerSe Innovation’s … Read more

-

Microsoft Teams

Can you name some milestones in the history of Microsoft Teams’ development? How did Microsoft respond to competition from platforms like Slack in the … Read more

-

What Is Douyin? An Introduction

How has Douyin’s user base grown since its inception in 2016, and what implications does this have for its influence? What role has Zhang Yiming’s … Read more

-

TikTok App

How has the TikTok app’s evolution shaped the landscape of social media platforms? What factors contribute to user engagement levels on the TikTok app? What strategies have … Read more

-

How Reddit Shaped Online Communities: History, Challenges, and Achievements

When was Reddit established and where is it headquartered? What is Reddit’s user base and what is it known for? Who are the co-founders of … Read more

-

Kuaishou Technology: The Rise of China’s Leading Short Video Platform

1. What factors do you think contributed most significantly to Kuaishou’s rapid emergence as a cornerstone in short-form video and social media engagement? 2. How do … Read more

-

YouTube Story

1. When was YouTube officially launched, and what type of content was it mainly used for? 2. Which tech giant bought YouTube, and in what … Read more

-

Social Media Forums

Which social media forums are currently considered among the top in the digital landscape? What distinct features and purposes characterize each of these leading forums … Read more

AI

“AI is no longer an experiment; it’s the engine powering modern technology, transforming tools, platforms, and consumer experiences at a global scale.”
