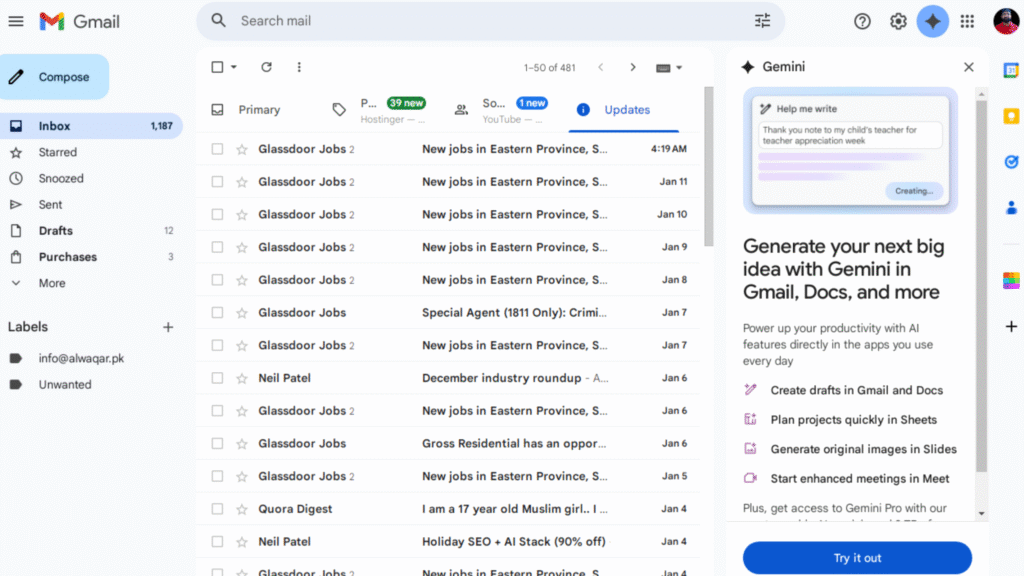

Understanding What Google’s AI Inbox Actually Is

At its core, Gmail’s AI Inbox is not simply a cosmetic redesign. It represents a conceptual shift away from email as a static archive and toward email as a dynamic hub for tasks and information. Rather than displaying messages as individual units, the AI Inbox view synthesizes content across multiple emails and presents it as digestible summaries and suggested actions.

When the feature is enabled, the traditional Gmail inbox is replaced by an AI-generated overview page. This page highlights suggested to-dos derived from message content, followed by broader topics that the system believes the user should review. Each suggestion links back to the original email, so users can dive deeper or reply directly if needed.

This approach positions Gmail less as a mailbox and more as an intelligent assistant that interprets communication on the user’s behalf. Google’s AI email tools are increasingly focused on reducing cognitive load, and the AI Inbox is one of the most ambitious applications of that philosophy to date.

Limited Access and Early Testing Conditions

Currently, Google’s AI Inbox is available only to a small group of trusted testers. It is limited to consumer Gmail accounts and does not yet support Workspace users, who arguably represent the most demanding email audience. This restriction highlights the experimental nature of the feature and suggests that Google is proceeding cautiously before rolling it out at scale.

As with many experimental Gmail features, the current version may not reflect the final product. Early testers are effectively interacting with a prototype that is still learning how to interpret diverse inbox behaviors. This context matters when weighing both the strengths and the shortcomings of the AI Inbox.

Google has historically used limited testing phases to refine major Gmail updates, and the AI Inbox is likely to undergo significant iteration based on user feedback, performance metrics, and real-world usage patterns.

How AI-Generated Summaries Change Email Consumption

One of the most noticeable aspects of the AI Inbox is its reliance on AI-generated email summaries. Instead of reading each message individually, users are presented with condensed interpretations of content across multiple emails. These summaries aim to capture key points, deadlines, and requests without requiring users to open each message.

For users with high-volume inboxes, this approach could dramatically reduce time spent scanning emails. AI-based email organization allows the system to cluster related messages and surface the most relevant information first. In theory, this enables faster decision-making and more efficient inbox zero strategies.

However, summarization also raises questions of accuracy and trust. Subtle nuances in tone, intent, or urgency can be lost when messages are condensed. While Google’s AI productivity tools have improved significantly, email remains a domain where small details can have outsized consequences.

Suggested To-Dos and Task-Oriented Email Design

Another defining feature of the AI Inbox for Gmail is its emphasis on actionable insights. Suggested to-dos appear prominently at the top of the inbox, encouraging users to treat email as a task list rather than a passive stream of messages.

These AI-generated tasks are based on inferred intent within emails, such as requests for responses, reminders to review documents, or time-sensitive notifications. By elevating these items, Gmail attempts to bridge the gap between communication and productivity tools.

This task-centric design aligns with broader trends in AI productivity software, where systems aim to reduce friction between information intake and action. Rather than requiring users to manually convert emails into tasks, the AI inbox view attempts to do that work automatically.

Still, this approach raises questions about user control. Not all users want their inbox to dictate their task priorities, and some may prefer the autonomy of deciding what deserves attention.

Topic Grouping and Contextual Awareness

Beyond individual to-dos, the AI Inbox organizes emails into topics that the system believes are worth reviewing. These topic clusters might include newsletters, ongoing conversations, financial updates, or recurring subscriptions.

This kind of AI-driven organization brings contextual awareness to inbox management. Instead of treating each email as an isolated event, the system recognizes patterns and relationships over time. For users who receive frequent updates from the same sources, this could reduce redundancy and improve comprehension.

Topic grouping also reflects Google’s broader investment in contextual AI across its products. Similar principles are already visible in Google Search, Docs, and Calendar, where AI attempts to understand not just content, but intent and relevance.

Inbox Zero Meets Artificial Intelligence

For users who already maintain disciplined inbox zero systems, the AI Inbox presents an interesting paradox. On one hand, AI-powered inbox management promises to make inbox zero easier by highlighting what matters most. On the other hand, it adds an interpretive layer that may not align with established personal workflows.

Users who prefer strict manual control may find the AI inbox view unnecessary or even intrusive. For these individuals, the traditional chronological list offers clarity and predictability that AI summaries cannot fully replicate.

This tension highlights an important truth about AI email management tools: effectiveness is highly subjective. What feels transformative for one user may feel redundant or disruptive for another.

Consumer Gmail Accounts Versus Professional Workflows

The current limitation of the AI Inbox to consumer Gmail accounts is notable. Personal inboxes tend to have lower volume and more predictable patterns than professional ones. Newsletters, personal reminders, and transactional emails are easier for AI systems to interpret than complex workplace communication.

Professional inboxes often involve ambiguous requests, layered conversations, and sensitive information that may challenge AI-based summarization. Until the AI Inbox is tested within Workspace environments, its suitability for enterprise use remains uncertain.

That said, Google’s decision to start with consumer Gmail suggests a strategy of gradual learning. By refining the system in simpler contexts, Google can improve accuracy before introducing it to higher-stakes professional settings.

Privacy, Trust, and AI Interpretation

Any discussion of an AI-driven inbox view must address privacy. Gmail already processes email content for spam detection, categorization, and smart features, but deeper AI interpretation may heighten user concerns.

The AI Inbox relies on analyzing message content to generate summaries, tasks, and topics. While this processing occurs within Google’s existing infrastructure, users may still question how their data is being used and stored.

Trust is central to adoption. For the AI Inbox to succeed, users must believe that the system is not only accurate but also respectful of privacy boundaries. Transparent communication from Google about how these AI email tools operate will be critical.

Design Philosophy and the Future of Gmail

The AI Inbox is as much a design experiment as it is a technical one. By reimagining the inbox as an overview dashboard, Google is challenging long-standing assumptions about how email should look and function.

This redesign aligns with a broader trend toward proactive software. Instead of waiting for user input, systems increasingly anticipate needs and surface relevant information automatically. Gmail’s AI inbox view represents a clear step in that direction.

If successful, this approach could influence not only Gmail but email clients across the industry. Competitors may adopt similar AI-driven inbox organization strategies, accelerating a shift away from purely chronological email displays.

Why the AI Inbox May Not Be for Everyone

Despite its potential, the AI Inbox for Gmail is unlikely to appeal universally. Some users value the simplicity and transparency of a traditional inbox. Others may distrust automated prioritization or prefer to process emails manually.

Additionally, early versions of experimental Gmail features often struggle with edge cases. Misinterpreted emails, missed tasks, or irrelevant topic groupings could frustrate users and undermine confidence in the system.

The success of the AI Inbox will depend on how well Google balances automation with user agency. Providing customization options and clear explanations for AI decisions may help bridge this gap.

What This Means for the Evolution of Email

The introduction of Google’s AI Inbox for Gmail reflects a broader shift in how digital tools are evolving. As AI productivity tools become more capable, the role of software is moving from passive storage to active assistance.

Email, long criticized for inefficiency, may benefit significantly from this transformation. AI-generated summaries, task extraction, and contextual grouping address many of the pain points users associate with inbox overload.

However, the path forward will require careful design, ongoing refinement, and responsiveness to user feedback. Email is deeply personal, and any attempt to reshape it must respect diverse preferences and workflows.