This article examines how AI regulation is evolving worldwide, exploring the policies, governance frameworks, and risk-based approaches shaping the responsible development and oversight of artificial intelligence.

AI Regulation in a Transforming World: How Artificial Intelligence Can Be Governed Responsibly

Artificial intelligence is no longer a future concept confined to research laboratories or speculative fiction. It is embedded in everyday decision-making systems, from credit scoring and medical diagnostics to content moderation and autonomous vehicles. As AI systems gain autonomy, scale, and influence, societies across the world are grappling with a critical question: how can AI be regulated in a way that protects public interest without stifling innovation?

AI regulation has become a defining policy challenge of the digital age. Unlike earlier technologies, artificial intelligence evolves dynamically, learns from vast datasets, and can produce outcomes that even its creators cannot always predict. This reality demands a rethinking of traditional technology regulation models. Effective AI governance must address not only technical risks, but also ethical, legal, economic, and societal implications.

This article examines how artificial intelligence can be regulated through outcome-based frameworks, risk management strategies, and international cooperation. It explores emerging AI laws, oversight mechanisms, and governance best practices, and analyzes the challenges of regulating AI in a rapidly changing technological environment.

Understanding the Need for AI Regulation

The case for artificial intelligence regulation is grounded in the scale and impact of AI-driven decisions. Algorithms increasingly influence who gets hired, who receives loans, which content is amplified online, and how public resources are allocated. When these systems fail, the consequences can be widespread, opaque, and difficult to reverse.

AI risks and harms can manifest in multiple ways. Bias in AI systems may reinforce discrimination. Deepfakes can undermine democratic processes and public trust. Automated decision systems may deny individuals access to essential services without meaningful explanation or recourse. These risks are amplified when AI systems operate at scale across borders and industries.

AI regulation seeks to establish guardrails that ensure responsible AI use while enabling innovation. The goal is not to halt technological progress, but to align it with societal values, human rights, and consumer protections. Effective AI policy provides clarity for developers, safeguards for users, and accountability mechanisms for those deploying AI systems.

The Unique Challenges of Regulating Artificial Intelligence

Regulating AI presents challenges that differ significantly from those associated with earlier technologies. Traditional laws are often static, while AI systems evolve continuously through updates, retraining, and emergent behavior. This mismatch complicates enforcement and compliance.

One of the most persistent AI regulation challenges is definitional ambiguity. Artificial intelligence encompasses a broad spectrum of systems, from simple rule-based automation to complex general-purpose AI models. Crafting AI laws that are precise yet flexible enough to cover this diversity is an ongoing struggle for policymakers.

Another challenge lies in opacity. Many advanced AI models operate as black boxes, making it difficult to assess AI transparency, traceability, and testability. Without insight into how decisions are made, assigning AI accountability or liability becomes problematic. Regulators must therefore balance technical feasibility with legal expectations.

Finally, AI regulation must contend with jurisdictional fragmentation. AI systems often operate globally, while laws remain national or regional. This creates inconsistencies in AI compliance requirements and raises questions about enforcement, cross-border liability, and regulatory arbitrage.

From Rules to Outcomes: A Shift in Regulatory Philosophy

One emerging approach to AI regulation emphasizes outcomes rather than prescriptive technical rules. Outcome-based AI regulation focuses on the real-world impact of AI systems instead of dictating specific design choices.

Under this model, regulators assess whether an AI system causes harm, violates rights, or creates unacceptable risks, regardless of how it is technically implemented. This approach allows flexibility for innovation while maintaining accountability for societal impact. Regulating AI based on impact is particularly relevant for rapidly evolving general-purpose AI systems.

Outcome-based frameworks also support proportionality. Low-risk applications, such as AI-powered photo enhancement tools, may face minimal oversight, while high-risk systems used in healthcare, policing, or financial services are subject to stricter requirements. This tiered approach recognizes that not all AI systems pose the same level of risk.

Risk-Based AI Regulatory Frameworks

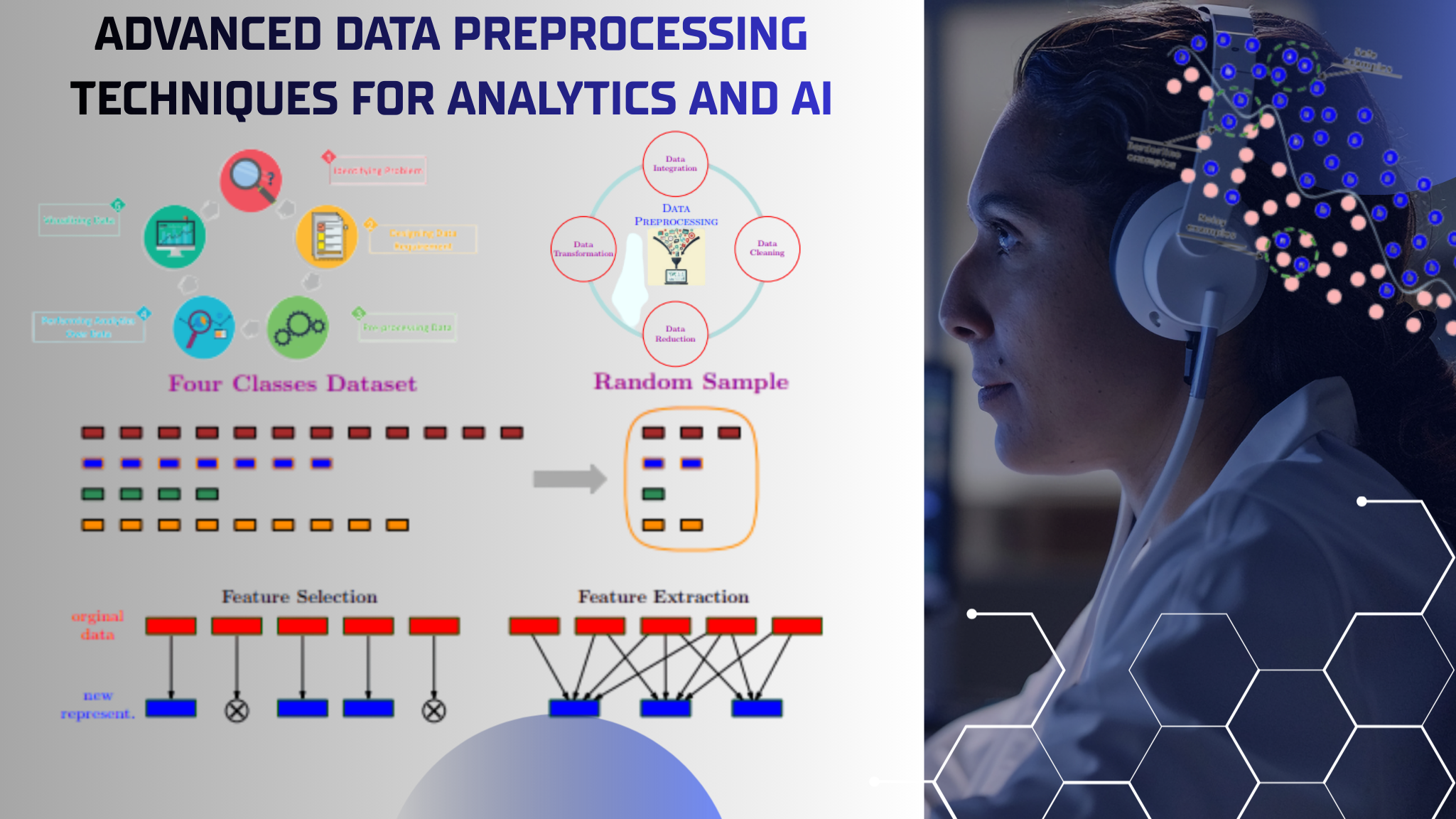

Risk-based regulation has emerged as a central pillar of modern AI governance. These frameworks classify AI systems according to their potential risks and assign obligations accordingly. AI risk management becomes a continuous process rather than a one-time compliance exercise.

High-risk AI systems typically require rigorous testing, documentation, and monitoring. This includes ensuring AI testability, validating training data, and implementing safeguards against bias and system failures. Developers and deployers may be required to conduct impact assessments and maintain audit trails to support AI traceability.

Lower-risk systems may face lighter requirements, such as transparency disclosures or voluntary codes of conduct. This graduated approach helps allocate regulatory resources effectively while reducing unnecessary burdens on innovation.
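The tiering logic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two tiers, the domain names, and the obligation lists are taken from the examples in this article, and names such as `RiskTier`, `AISystem`, and `classify` are hypothetical rather than drawn from any actual law or standard.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    HIGH = "high"


# Assumption: domains treated as high-risk, based on the article's examples.
# A real framework would define these categories in law or guidance.
HIGH_RISK_DOMAINS = {"healthcare", "policing", "financial_services"}

# Assumption: obligations per tier, paraphrased from the text above.
OBLIGATIONS = {
    RiskTier.HIGH: [
        "rigorous testing",
        "documentation",
        "ongoing monitoring",
        "impact assessment",
        "audit trail",
    ],
    RiskTier.LOW: ["transparency disclosure"],
}


@dataclass(frozen=True)
class AISystem:
    name: str
    domain: str


def classify(system: AISystem) -> RiskTier:
    """Assign a tier from the deployment domain alone (a deliberate simplification)."""
    if system.domain in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.LOW


def obligations_for(system: AISystem) -> list[str]:
    """Return a copy of the obligations attached to the system's tier."""
    return list(OBLIGATIONS[classify(system)])


if __name__ == "__main__":
    for system in (
        AISystem("triage-assistant", "healthcare"),
        AISystem("photo-enhancer", "photo_enhancement"),
    ):
        print(system.name, classify(system).value, obligations_for(system))
```

Classifying by domain alone keeps the sketch short. Actual frameworks generally weigh additional factors, such as who is affected and how reversible the decision is.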

Transparency, Accountability, and Trust

Public trust is a cornerstone of sustainable AI adoption. Without confidence in how AI systems operate, individuals and institutions may resist their deployment, regardless of potential benefits. AI transparency plays a critical role in building that trust.

Transparency does not necessarily mean revealing proprietary algorithms. Rather, it involves providing meaningful explanations of AI decision-making processes, limitations, and potential risks. Users should understand when they are interacting with an AI system and how decisions affecting them are made.

AI accountability frameworks complement transparency by clarifying who is responsible when AI systems cause harm. Accountability mechanisms may include human oversight requirements, internal governance structures, and clear lines of responsibility across the AI lifecycle. Without accountability, transparency alone is insufficient.

Liability and Legal Responsibility in AI Systems

AI liability remains one of the most complex aspects of AI regulation. When an AI system causes harm, determining responsibility can involve multiple actors, including developers, data providers, system integrators, and end users.

Traditional liability models are often ill-suited to AI-driven harms, particularly when systems exhibit emergent behavior not explicitly programmed by humans. Policymakers are exploring new approaches that distribute liability based on control, foreseeability, and risk allocation.

Clear AI liability rules can also incentivize safer design practices. When organizations understand their legal exposure, they are more likely to invest in robust testing, monitoring, and governance. Liability frameworks thus function as both corrective and preventive tools within AI oversight regimes.

Ethical AI Regulation and Responsible Use

Ethical considerations are integral to artificial intelligence regulation. Ethical AI regulation seeks to embed principles such as fairness, non-discrimination, human autonomy, and respect for privacy into enforceable standards.

Responsible AI use extends beyond compliance with laws. It involves organizational cultures that prioritize long-term societal impact over short-term gains. Many AI governance best practices emphasize ethics committees, stakeholder engagement, and continuous evaluation of ethical risks.

However, ethical principles alone are insufficient without enforcement. Translating ethical commitments into measurable requirements is one of the central challenges of AI regulation. This requires collaboration between technologists, ethicists, lawyers, and policymakers.

AI Training Data and System Integrity

Training data is the foundation of most AI systems, and its quality directly influences outcomes. AI training data regulation addresses issues such as data provenance, representativeness, and consent.

Poor-quality or biased datasets can lead to discriminatory outcomes, undermining both system performance and public trust. Regulatory approaches increasingly emphasize documentation of data sources, processes for bias mitigation, and mechanisms for correcting errors.
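One way to turn "testing for discriminatory outcomes" into something measurable is to compare how often each group receives a favorable decision. The sketch below computes a simple selection-rate ratio. It is an illustration, not a regulatory test: the function names and sample data are hypothetical, and the threshold at which a ratio becomes a concern is a policy choice that this code does not make.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the share of favorable outcomes per group.

    `outcomes` is an iterable of (group, favorable) pairs,
    where `favorable` is a bool.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        favorable[group] += int(ok)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes):
    """Ratio of the lowest group selection rate to the highest.

    A value of 1.0 means all groups are selected at the same rate;
    values closer to 0 indicate a larger gap between groups.
    """
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes provided")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Made-up sample: group "a" approved 8 of 10 times, group "b" 4 of 10.
    sample = (
        [("a", True)] * 8 + [("a", False)] * 2
        + [("b", True)] * 4 + [("b", False)] * 6
    )
    print(selection_rates(sample))
    print(disparate_impact_ratio(sample))
```

A single ratio cannot establish or rule out discrimination on its own, but tracking it over time gives auditors a concrete number to document and question.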

Data governance is also closely linked to AI safety. Secure data handling, protection against data poisoning, and safeguards for sensitive information are essential components of responsible AI deployment.

General-Purpose AI and Emerging Risks

General-purpose AI systems present unique regulatory challenges due to their adaptability across multiple domains. Unlike narrow AI applications, these systems can be repurposed in ways not anticipated by their creators.

General-purpose AI regulation must therefore account for downstream uses and potential misuse. This includes risks associated with deepfakes, automated misinformation, and large-scale manipulation. Regulating deepfakes has become particularly urgent as synthetic media grows increasingly realistic and accessible.

Regulators are exploring obligations for developers of general-purpose AI to assess and mitigate foreseeable risks, even when specific applications are determined by third parties.

National and Regional AI Regulation Frameworks

AI regulation frameworks vary significantly by country, reflecting different legal traditions, economic priorities, and cultural values. While some regions emphasize innovation incentives, others prioritize precaution and consumer protection.

The European Union has pioneered a comprehensive risk-based approach through its AI Act, which sorts AI systems into tiers according to their potential harm. This model emphasizes conformity assessments, transparency obligations, and strong enforcement mechanisms.

Canada has pursued algorithm regulation focused on accountability and impact assessments, particularly within the public sector. Other jurisdictions are adopting hybrid models that combine voluntary guidelines with binding requirements.

Despite these differences, convergence is gradually emerging around core principles such as risk proportionality, transparency, and accountability.

AI Governance Within Organizations

Effective AI governance extends beyond government regulation. Organizations deploying AI systems must establish internal frameworks to manage risks, ensure compliance, and uphold ethical standards.

AI governance best practices include clear policies on system development and deployment, defined roles for oversight, and ongoing monitoring of system performance. Internal audits and third-party assessments can enhance AI accountability and traceability.
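To make traceability concrete, an organization might keep a tamper-evident log of automated decisions that internal or third-party auditors can later verify. The following is a minimal sketch of one possible design, a hash-chained log, and is not a mandated or standard format. The `AuditTrail` class and the record fields are hypothetical.

```python
import hashlib
import json


def _digest(record: dict, prev_hash: str) -> str:
    """Hash a record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        self.entries.append(
            {
                "record": record,
                "prev_hash": prev_hash,
                "hash": _digest(record, prev_hash),
            }
        )

    def verify(self) -> bool:
        """Recompute every hash; return False if any entry was altered."""
        prev_hash = ""
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    # Hypothetical decision records; field names are illustrative only.
    trail.append({"model": "credit-v1", "decision": "deny", "reviewer": None})
    trail.append({"model": "credit-v1", "decision": "approve", "reviewer": "analyst-7"})
    print(trail.verify())

    # Editing a past record breaks the chain and is detected on verification.
    trail.entries[0]["record"]["decision"] = "approve"
    print(trail.verify())
```

Chaining hashes means an auditor only needs the final hash from a trusted source to detect edits to any earlier record. A production system would also need secure storage, access controls, and retention rules, which this sketch leaves out.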

Governance is not a one-time exercise. As AI systems evolve, governance structures must adapt. This need for continuous oversight underscores the concept of eternal vigilance in AI regulation.

Consumer Rights and Societal Impact

AI regulation is ultimately about protecting people. Consumer rights are increasingly central to regulatory debates, particularly in contexts where automated decisions affect access to essential services.

Individuals should have the right to understand, challenge, and seek redress for AI-driven decisions that impact them. These protections help balance power asymmetries between large technology providers and users.

Beyond individual rights, AI regulation must consider broader societal impact. This includes effects on labor markets, democratic institutions, and social cohesion. Societal impact assessments can help policymakers anticipate and mitigate systemic risks.

Technology Regulation Models and Lessons Learned

Historical approaches to technology regulation offer valuable lessons for AI policy. Overly rigid rules can stifle innovation, while laissez-faire approaches may allow harms to proliferate unchecked.

Successful technology regulation models often combine clear standards with adaptive mechanisms. Regulatory sandboxes, for example, allow experimentation under supervision, enabling learning without exposing the public to undue risk.

AI regulation benefits from similar flexibility. Adaptive frameworks that evolve alongside technology are better suited to managing long-term risks than static rules.

The Role of Oversight and Enforcement

AI oversight is essential to ensure that regulatory frameworks translate into real-world protections. Without enforcement, even well-designed AI laws risk becoming symbolic.

Oversight mechanisms may include dedicated regulatory bodies, cross-sector coordination, and international cooperation. Given the global nature of AI, information sharing and harmonization of standards are increasingly important.

Enforcement should also be proportionate. Excessive penalties may discourage innovation, while weak enforcement undermines credibility. Striking the right balance is a central challenge of AI regulation.

The Path Forward: Regulating AI in a Dynamic Landscape

AI regulation is not a destination but an ongoing process. As artificial intelligence continues to evolve, so too must the frameworks that govern it. Policymakers, industry leaders, and civil society all have roles to play in shaping responsible AI futures.

Future AI policy will likely emphasize outcome-based approaches, continuous risk assessment, and shared responsibility. Advances in AI transparency and testability may enable more effective oversight, while international collaboration can reduce fragmentation.

Ultimately, the success of AI regulation depends on its ability to protect public interest while fostering innovation. By focusing on impact, accountability, and trust, societies can harness the benefits of artificial intelligence while managing its risks.

Conclusion

AI regulation has emerged as one of the most consequential governance challenges of the modern era. Regulating artificial intelligence requires new thinking that moves beyond traditional legal frameworks and embraces adaptability, proportionality, and ethical responsibility.

Through risk-based and outcome-focused approaches, AI governance can address emerging threats such as bias, system failures, and deepfakes while supporting beneficial innovation. Transparency, accountability, and liability are essential pillars of this effort, reinforcing public trust in AI systems.

As artificial intelligence continues to shape economies and societies, effective AI regulation will determine whether this technology serves as a force for shared progress or unchecked disruption. The path forward demands vigilance, collaboration, and a commitment to aligning AI with human values.

FAQs

1. What is the primary goal of AI regulation?

The primary goal of AI regulation is to ensure that artificial intelligence systems are developed and used in ways that protect public safety, fundamental rights, and societal interests while still allowing innovation to progress.

2. Why is regulating artificial intelligence more complex than regulating traditional software?

AI systems can learn, adapt, and behave unpredictably based on data and context, making it harder to define static rules and assign responsibility compared to conventional, deterministic software.

3. How do governments determine which AI systems require strict oversight?

Most regulatory frameworks classify AI systems based on risk and impact, with stricter oversight applied to systems that influence critical areas such as healthcare, finance, law enforcement, or public services.

4. What role does transparency play in AI governance?

Transparency enables users, regulators, and affected individuals to understand how AI systems operate, identify potential risks or biases, and hold organizations accountable for AI-driven decisions.

5. How does AI regulation address bias and discrimination?

AI regulation addresses bias by requiring better data governance, testing for discriminatory outcomes, and implementing safeguards that reduce the risk of unfair or unequal treatment by automated systems.

6. Are companies legally responsible when AI systems cause harm?

Liability rules are evolving, but many AI laws aim to clarify responsibility by assigning legal accountability to developers, deployers, or operators based on their level of control over the system.

7. Will AI regulation slow down innovation?

Well-designed AI regulation is intended to support sustainable innovation by reducing risks, increasing public trust, and providing clearer rules that help organizations deploy AI responsibly.