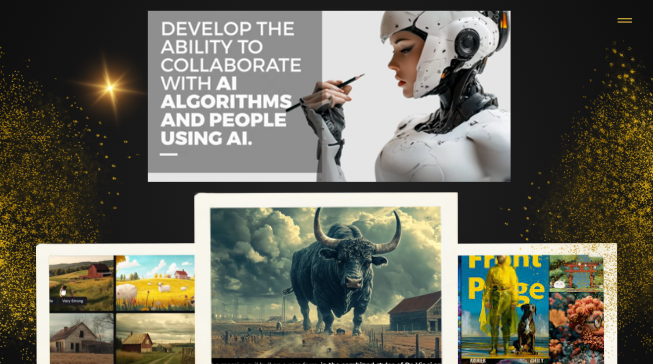

A new-generation GPT image generator that transforms text and photos into high-quality, customizable AI visuals for every creative need.

A New Standard in AI Visual Creation: Advanced GPT Image Generator Redefines Digital Art

The rapid evolution of artificial intelligence has transformed the way creators, designers, and everyday users bring their ideas to life. With the rise of modern GPT image generators, AI-powered visuals have become more accurate, accessible, and creatively flexible than ever before. Fotor’s latest GPT-driven image generation technology sets a new benchmark, offering high-quality AI visuals for both personal and professional use with unparalleled ease.

OpenAI-Powered Text-to-Image Generation

Fotor’s GPT image generator is built on powerful OpenAI-supported text-to-image technology designed for accurate prompt interpretation and high-definition visual output. Users simply enter their concept into the text box, choose an image style and format, and generate royalty-free AI visuals that match the tone, details, and aesthetic described. The system is engineered to deliver consistent results that align closely with the user’s creative direction.

Transform Images Instantly with AI Style Transfer

Beyond text-to-image creation, the tool features an advanced image-to-image engine that converts existing photos into new artistic styles without requiring any additional prompts. With a wide range of AI filters and automated style transformation options, users can effortlessly create cinematic, cartoon, anime, watercolor, and concept-art looks, among others. This prompt-free enhancement allows anyone to produce striking visuals in seconds.

Unlimited Access to High-Quality AI Outputs

While many platforms impose tight restrictions, particularly on free-tier users, Fotor’s GPT image generator is designed to reduce them. Users receive free credits for AI image generation and can produce multiple image variations from a single prompt. The system supports both text-based creation and unlimited style conversions, making it one of the more flexible AI image generators available today.

Explore Diverse Image Styles and Artistic Effects

The tool offers an extensive catalog of artistic styles, so creators can experiment with different aesthetics. Options range from realistic depictions to anime, sketch, watercolor, fantasy art, Studio Ghibli-inspired visuals, plush monster themes, and action-figure effects. These styles help users develop a distinctive visual identity and design original AI art that stands out.

High-Resolution Outputs for Any Creative Project

Whether for branding, marketing, digital art, or printed media, the GPT image generator delivers crisp, vivid, and professional-grade visuals. Users can download their AI-created images in high resolution and apply them directly to posters, book covers, website graphics, marketing campaigns, or personal artwork with confidence.

Create Personalized AI Avatars and Profile Pictures

For social media creators, influencers, and community managers, the tool offers an easy way to design exclusive AI avatars. From realistic portraits to anime, cartoon, pop art, and stylized designs, users can generate unique profile images that elevate their presence on platforms like Instagram, Facebook, TikTok, YouTube, X, and Discord. Simply upload a photo or create from text, and the AI produces tailor-made visuals that reflect personal style.

Edit and Enhance Images with Integrated AI Tools

The platform includes a suite of intelligent editing tools such as background removal, object replacement, and AI-based image enhancement. With support for advanced models like Flux AI, users can refine their generated visuals with greater precision. These built-in features eliminate the need to switch between applications, creating a seamless workflow for both beginners and professionals.

A Complete AI Photo Editing and Design Suite

As an all-in-one solution, Fotor also offers a complete AI photo editor for adjusting colors, applying effects, cropping, resizing, and customizing designs. Users can incorporate their AI-generated images into ready-made templates for posters, banners, business cards, podcast covers, social media graphics, and more. The platform enables quick customization for both personal and commercial projects.

Use AI Images for Blogs, Websites, and Book Covers

Writers and content creators can generate original illustrations for blog posts, web banners, or book covers. By entering a detailed prompt, the GPT image generator produces visuals that align with the narrative, color theme, and emotional tone of the content. This allows creators to enhance their storytelling with visually compelling artwork that strengthens viewer engagement.

Elevate Your Social Media with Impactful AI Visuals

For users aiming to grow their digital presence, the platform provides tools to produce attention-grabbing AI images suitable for social media posts. With high-definition quality and stylistic versatility, these visuals are designed to encourage more shares, likes, and other interactions across major platforms.

A Powerful GPT Image Generator for Every Creative Need

From text-to-image conversion to prompt-free image transformation, Fotor’s GPT image generator is designed to meet the demands of modern creators. Whether you are developing branding materials, producing digital art, enhancing social media content, or exploring new artistic styles, the tool offers limitless creative potential with professional-level results.