Generative AI is rapidly becoming a core enterprise capability, and this report explores how businesses across industries are applying AI technologies in real-world scenarios to improve productivity, automate workflows, enhance customer experiences, and shape the future of organizational decision-making.

Generative AI Use Cases in Business: A Comprehensive Enterprise Report

Generative AI use cases in business have moved from experimental pilots to mission‑critical systems that influence strategy, operations, and customer engagement. What was once perceived as a futuristic capability is now embedded across enterprise software, workflows, and decision‑making structures. Organizations are no longer asking whether artificial intelligence should be adopted, but how it can be applied responsibly, efficiently, and at scale.

This report examines how generative AI and related AI technologies are reshaping modern enterprises. It analyzes enterprise AI adoption, industry‑specific applications, governance considerations, and the strategic implications for organizations navigating rapid technological change.

The Evolution of Artificial Intelligence in the Enterprise

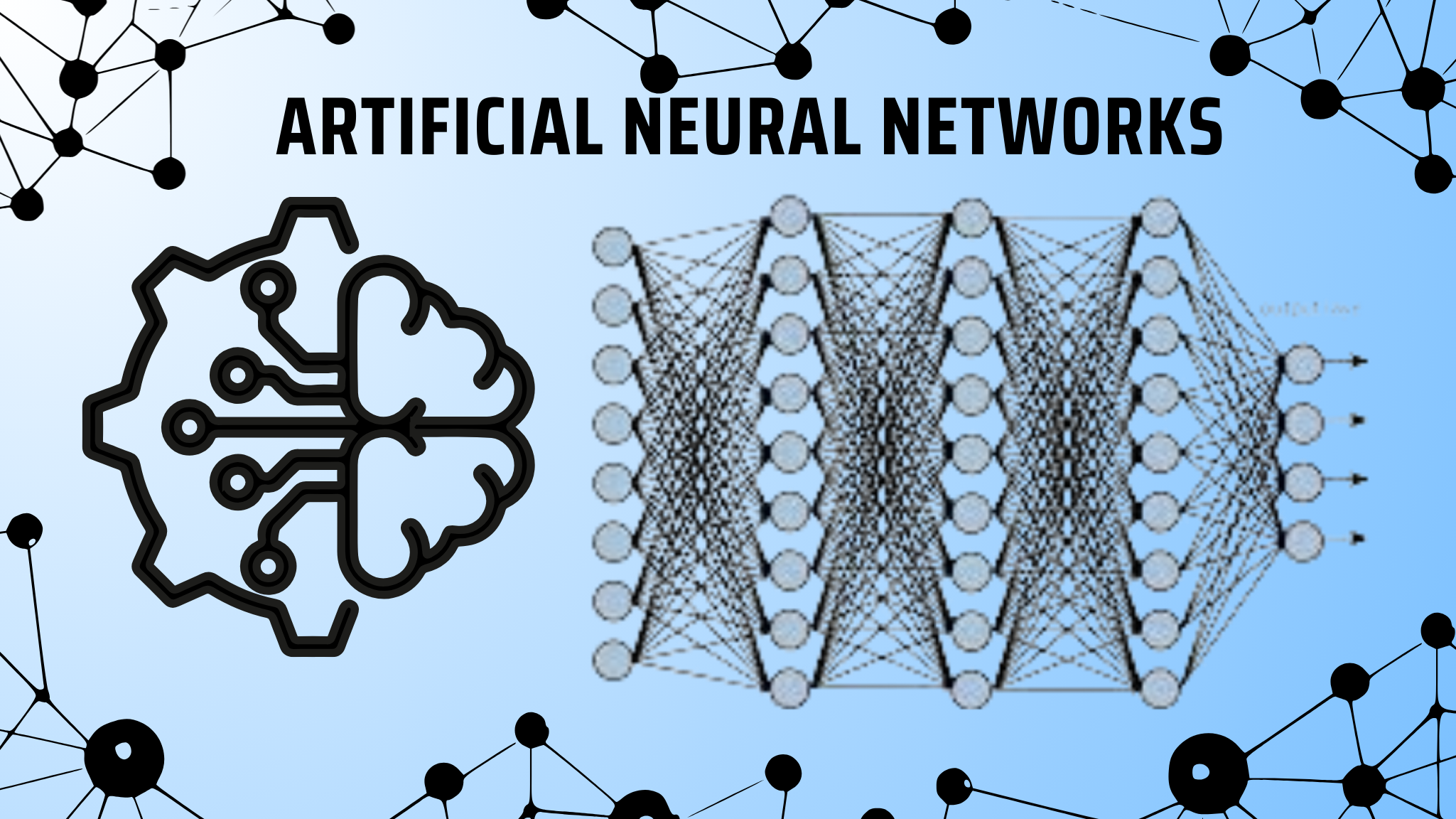

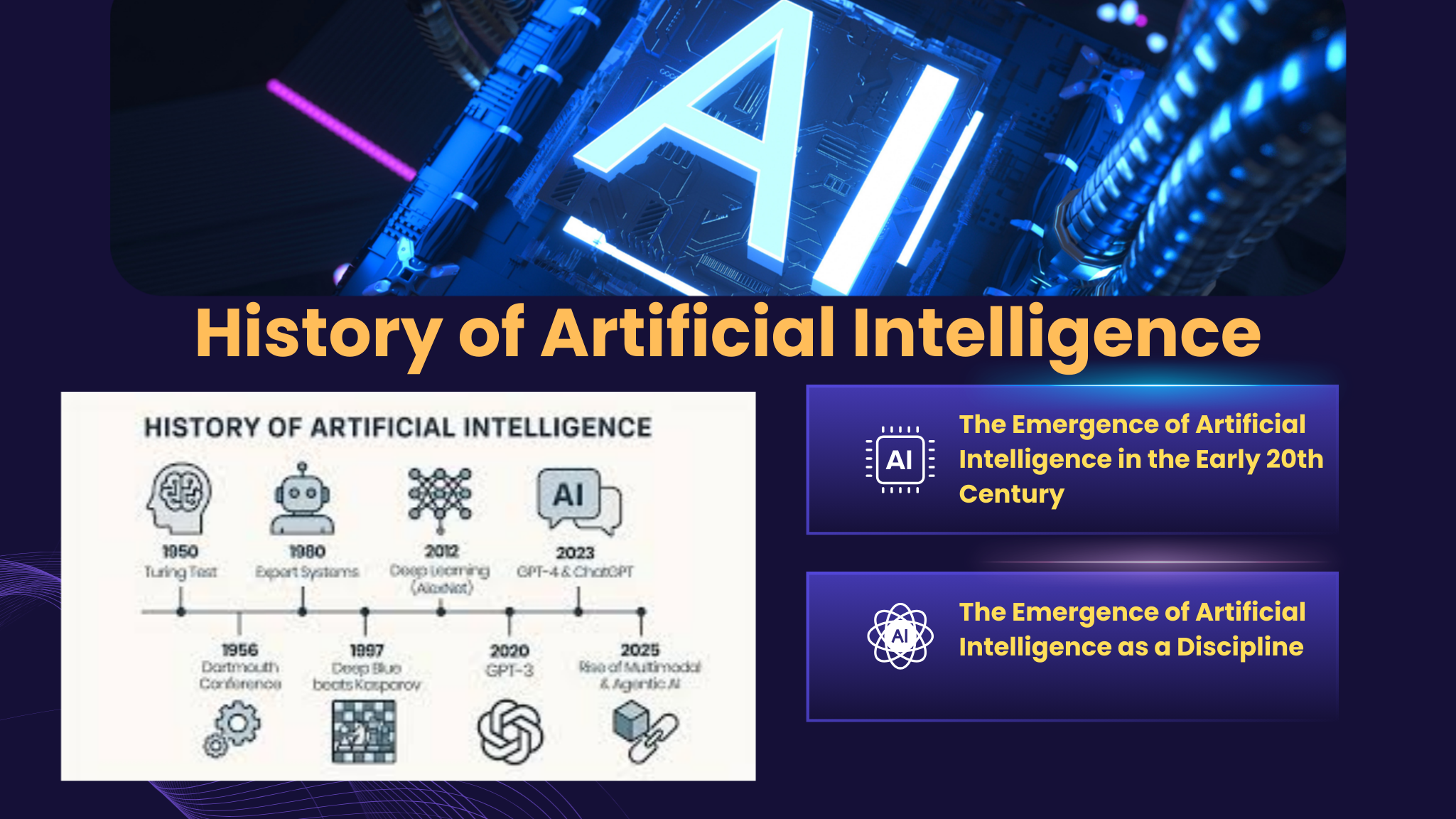

Artificial intelligence has evolved through several distinct phases. Early AI systems focused on rule‑based automation, followed by statistical machine learning models capable of identifying patterns in structured data. The current phase is defined by generative AI and large language models, which can understand context, generate human‑like content, and interact conversationally across multiple modalities.

Large language models such as OpenAI's GPT‑4 have accelerated enterprise interest by handling tasks that previously required human judgment. These models can draft documents, summarize reports, generate code, analyze customer feedback, and power AI assistants that operate across organizational systems. Combined with advances in computer vision and speech processing, generative AI has become a foundational layer of modern enterprise technology stacks.

Unlike earlier automation tools, generative AI does not simply execute predefined rules. It is trained on vast datasets, can work with new information supplied at run time (such as documents provided in a prompt), and supports knowledge‑intensive work. This shift explains why AI adoption has expanded beyond IT departments into marketing, finance, healthcare, manufacturing, and executive leadership.

Strategic Drivers Behind Generative AI Adoption

Several forces are driving organizations to invest in generative AI. Productivity pressure is one of the most significant: enterprises face rising costs, talent shortages, and increasing competition, creating demand for AI‑driven automation that improves efficiency without compromising quality.

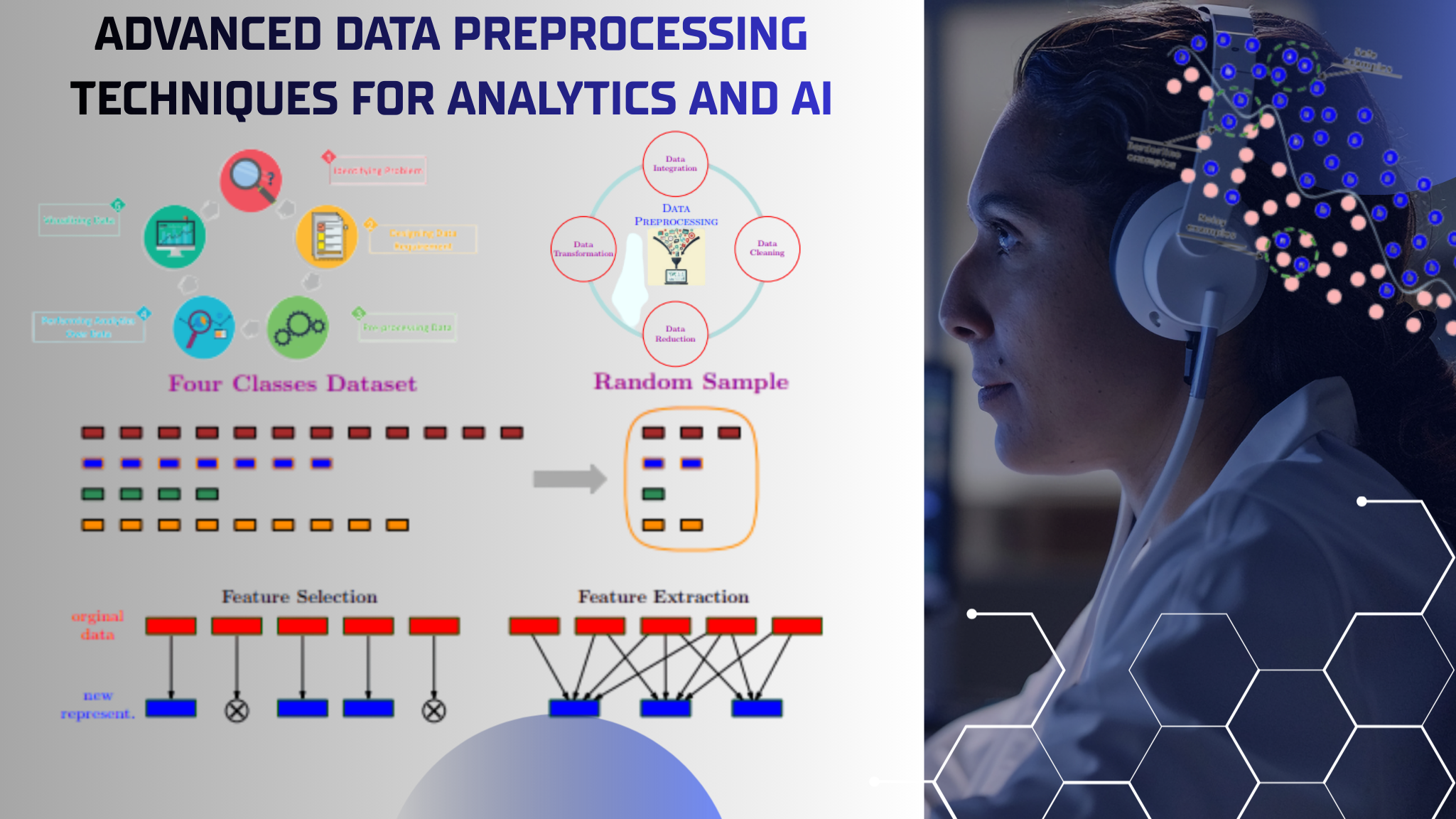

Another driver is data complexity. Companies generate massive volumes of unstructured data through emails, documents, images, videos, and conversations. Traditional analytics tools struggle to extract value from this information, while generative AI excels at interpretation, summarization, and contextual reasoning.

Customer expectations have also changed. Personalized experiences, real‑time support, and consistent engagement across channels are now standard requirements. AI‑powered chatbots, recommendation engines, and personalization systems allow organizations to meet these expectations at scale.

Finally, enterprise software vendors have accelerated adoption by embedding AI capabilities directly into their platforms. Tools such as Salesforce Einstein Copilot, SAP Joule, and Dropbox AI reduce the technical barrier to entry, making AI accessible to non‑technical users across the organization.

Enterprise AI Applications Across Core Business Functions

Generative AI use cases in business span nearly every enterprise function. In operations, AI‑powered workflows automate routine processes such as document handling, reporting, and compliance checks. AI summarization tools enable executives to review lengthy materials quickly, which speeds up decision‑making.

In human resources, AI assistants support recruitment by screening resumes, generating job descriptions, and analyzing candidate data. Learning and development teams use AI content generation to create personalized training materials tailored to employee roles and skill levels.

Finance departments apply AI models to forecast revenue, detect anomalies, and automate financial reporting. Human oversight remains essential, but AI can improve accuracy and reduce manual effort in data‑intensive tasks.

Legal and compliance teams benefit from AI document analysis tools that review contracts, flag risks, and support regulatory monitoring, as well as from AI transcription tools that turn recorded conversations into searchable text. These applications demonstrate how generative AI can augment specialized professional roles rather than replace them.

Generative AI in Marketing, Advertising, and Media

Marketing and advertising were among the earliest adopters of generative AI, and they remain areas of rapid innovation. AI‑generated content is now widely used to draft marketing copy, social media posts, product descriptions, and campaign concepts. This allows teams to scale output while maintaining brand consistency.

AI personalization tools analyze customer behavior to deliver tailored messages across digital channels. In advertising, generative models assist with creative testing by producing multiple variations of visuals and copy, enabling data‑driven optimization.

Media and entertainment platforms have also embraced AI. YouTube's AI features enhance content discovery and moderation, while Spotify's AI DJ demonstrates how AI‑powered recommendations can create dynamic, personalized listening experiences. These use cases highlight the role of generative AI in shaping audience engagement and content consumption.

AI Use Cases in Healthcare, Biotechnology, and Pharmaceuticals

Healthcare represents one of the most impactful areas for enterprise generative AI applications. AI in healthcare supports clinical documentation, medical transcription, and patient communication, reducing administrative burden on clinicians.

In biotechnology and pharmaceuticals, generative AI accelerates research and development by analyzing scientific literature, predicting molecular structures, and supporting drug discovery workflows. Machine learning models identify patterns in complex biological data that would be difficult for humans to detect manually.

AI governance and ethical oversight are particularly critical in these sectors. Responsible AI practices, transparency, and regulatory compliance are essential to ensure patient safety and trust. As adoption grows, healthcare organizations must balance innovation with accountability.

Industrial and Robotics Applications of AI Technology

Beyond knowledge work, AI technology is transforming physical industries through robotics and automation. AI in robotics enables machines to perceive their environment, adapt to changing conditions, and perform complex tasks with precision.

Boston Dynamics robots exemplify how computer vision and machine learning support mobility, inspection, and logistics applications. In manufacturing and warehousing, AI‑driven automation improves efficiency, safety, and scalability.

The automotive sector has also adopted AI in specialized domains such as motorsport, where machine learning models analyze performance data and help optimize race strategy in real time. These applications demonstrate the versatility of AI across both digital and physical environments.

AI in Cloud Computing, E‑Commerce, and Digital Platforms

Cloud computing has played a critical role in enabling enterprise AI adoption. Scalable infrastructure allows organizations to deploy large language models and AI tools without maintaining complex on‑premises systems. Nvidia's GPUs and AI software power many of these platforms by providing the computational capacity required for training and inference.

In e‑commerce, AI‑powered recommendations, dynamic pricing models, and customer support chatbots enhance user experience and drive revenue growth. AI personalization can increase conversion rates by aligning products and messaging with individual preferences.

Digital platforms increasingly treat AI as a core service rather than an add‑on feature. This integration reflects a broader shift toward AI‑native enterprise software architectures.

AI Assistants and the Future of Knowledge Work

AI assistants represent one of the most visible manifestations of generative AI in business. Tools such as ChatGPT, enterprise copilots, and virtual assistants support employees by answering questions, generating drafts, and coordinating tasks across applications.

These systems reduce cognitive load and enable workers to focus on higher‑value activities. Rather than replacing human expertise, AI assistants act as collaborative partners that enhance productivity and creativity.

As AI assistants become more context‑aware and integrated, organizations will need to redefine workflows, performance metrics, and skill requirements. Change management and training will be essential to realize long‑term value.

Ethical Considerations and AI Governance

The rapid expansion of generative AI use cases in business raises important ethical and governance questions. Misuse of AI, data privacy violations, and algorithmic bias pose significant risks if not addressed proactively.

Responsible AI frameworks emphasize transparency, accountability, and human oversight. Organizations must establish clear AI policies that define acceptable use, data handling practices, and escalation procedures for errors or unintended outcomes.

AI governance is not solely a technical challenge. It requires cross‑functional collaboration among legal, compliance, IT, and business leaders. As regulatory scrutiny increases globally, enterprises that invest early in governance structures will be better positioned to adapt.

Measuring Business Value and ROI from AI Adoption

Demonstrating return on investment remains a priority for enterprise leaders. Successful AI adoption depends on aligning use cases with strategic objectives and measurable outcomes.

Organizations should evaluate AI initiatives based on productivity gains, cost reduction, revenue impact, and customer satisfaction. Pilot programs, iterative deployment, and continuous monitoring help mitigate risk and ensure scalability.

Importantly, value creation often extends beyond immediate financial metrics. Enhanced decision quality, faster innovation cycles, and improved employee experience contribute to long‑term competitive advantage.

The Road Ahead for Generative AI in Business

Generative AI is still in an early stage of enterprise maturity. As models become more efficient, multimodal, and domain‑specific, their impact will continue to expand. Integration with existing systems, improved explainability, and stronger governance will shape the next phase of adoption.

Future enterprise AI applications are likely to blur the boundary between human and machine work. Organizations that invest in skills development, ethical frameworks, and strategic alignment will be best positioned to benefit from this transformation.

Rather than viewing generative AI as a standalone technology, enterprises should treat it as an evolving capability embedded across processes, platforms, and culture. This perspective enables sustainable innovation and responsible growth.

Conclusion

Generative AI use cases in business illustrate a fundamental shift in how organizations operate, compete, and create value. From marketing and healthcare to robotics and cloud computing, AI technologies are redefining enterprise capabilities.

The most successful organizations approach AI adoption with clarity, discipline, and responsibility. By focusing on real‑world applications, governance, and human collaboration, enterprises can harness the full potential of generative AI while managing its risks.

As AI continues to evolve, its role in business will move from augmentation to strategic partnership. Enterprises that understand this transition today will shape the economic and technological landscape of tomorrow.

FAQs

What makes generative AI different from traditional AI systems in business?

Generative AI differs from traditional AI by its ability to create new content, insights, and responses rather than only analyzing existing data. In business environments, this enables tasks such as drafting documents, generating marketing content, summarizing complex reports, and supporting decision-making through conversational AI assistants.

Which business functions benefit the most from generative AI adoption?

Functions that rely heavily on information processing see the greatest impact, including marketing, customer support, human resources, finance, and operations. Generative AI improves efficiency by automating repetitive work while also supporting creative and strategic activities that previously required significant human effort.

How are enterprises using generative AI to improve productivity?

Enterprises use generative AI to streamline workflows, reduce manual documentation, automate reporting, and assist employees with real-time insights. AI-powered tools help teams complete tasks faster, minimize errors, and focus on higher-value work that drives business outcomes.

Is generative AI suitable for regulated industries like healthcare and finance?

Yes, generative AI can be applied in regulated industries when supported by strong governance, transparency, and human oversight. Organizations in healthcare and finance use AI for documentation, analysis, and decision support while ensuring compliance with data protection and regulatory standards.

What role do AI assistants play in modern enterprise software?

AI assistants act as intelligent interfaces between users and enterprise systems. They help employees retrieve information, generate content, coordinate tasks, and interact with complex software platforms using natural language, reducing friction and improving usability.

What are the main risks businesses should consider when deploying generative AI?

Key risks include data privacy concerns, inaccurate outputs, bias in AI-generated content, and potential misuse. Addressing these risks requires clear AI policies, ongoing monitoring, ethical guidelines, and a structured approach to AI governance.

How can organizations measure the success of generative AI initiatives?

Success is measured by evaluating productivity gains, cost reductions, quality improvements, customer satisfaction, and employee adoption. Many organizations also assess long-term value, such as faster innovation cycles and improved decision-making, rather than relying solely on short-term financial metrics.