This in-depth guide explores how data preprocessing in big data analytics transforms raw, complex data into reliable, high-quality inputs through cleaning, integration, transformation, and feature engineering to enable accurate analytics and machine learning outcomes.

Data Preprocessing in Big Data Analytics: Foundations, Techniques, and Strategic Importance

Introduction:

In the modern data-driven economy, organizations rely on massive volumes of structured and unstructured data to fuel analytics, artificial intelligence, and machine learning initiatives. However, raw data in its original form is rarely suitable for analysis or modeling. Before meaningful insights can be derived, data must undergo a systematic and carefully engineered preparation process. This process is known as data preprocessing in big data analytics.

Data preprocessing serves as the bridge between raw data collection and advanced analytical modeling. It ensures accuracy, consistency, relevance, and usability of data across large-scale systems. Without it, even the most sophisticated algorithms produce unreliable results. As data sources become increasingly complex and diverse, preprocessing has evolved into a critical discipline within big data analytics and machine learning workflows.

This comprehensive guide explores the conceptual foundations, methods, challenges, and best practices of data preprocessing, offering a detailed understanding of why it is indispensable for modern analytics.

Understanding Data Preprocessing in Big Data Analytics

What is data preprocessing in big data analytics? At its core, data preprocessing refers to a collection of processes applied to raw data to convert it into a clean, structured, and analysis-ready format. In big data environments, this process must scale across high volume, velocity, and variety while maintaining data integrity.

Unlike traditional data preparation, big data preprocessing often deals with distributed systems, streaming data, heterogeneous formats, and incomplete information. It encompasses activities such as data collection, cleaning, transformation, integration, reduction, and validation, all performed prior to analytics or machine learning model training.

Effective preprocessing directly impacts model accuracy, computational efficiency, and decision-making reliability.

Data Collection and Data Provenance

The preprocessing lifecycle begins with data collection. Data may originate from transactional systems, sensors, social platforms, enterprise databases, or third-party APIs. Each source introduces its own structure, format, and quality constraints.

An often-overlooked aspect of data collection is data provenance. Data provenance tracks the origin, movement, and transformation history of data across systems. Maintaining provenance information ensures transparency, auditability, and regulatory compliance, especially in enterprise analytics and regulated industries.

Closely related is metadata in data processing. Metadata describes the characteristics of data, including schema, timestamps, ownership, and processing rules. Proper metadata management supports automation, governance, and quality control throughout preprocessing pipelines.
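One lightweight way to picture provenance and metadata travelling with a dataset is a small record that is appended to at every preprocessing step. The sketch below is illustrative only: `ProvenanceRecord`, its fields, and the step names are hypothetical and do not correspond to any particular governance tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceRecord:
    """Hypothetical lineage record: where a dataset came from and what was done to it."""
    dataset: str
    source: str
    steps: list = field(default_factory=list)

    def log_step(self, name, **params):
        # Store the step name, its parameters, and a UTC timestamp.
        self.steps.append({
            "step": name,
            "params": params,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return self  # returning self allows chained calls


record = ProvenanceRecord(dataset="orders", source="crm_export")
record.log_step("deduplicate", key="order_id").log_step("impute", strategy="median")
for step in record.steps:
    print(step["step"], step["params"])
```

In a real pipeline this information would more likely live in a metadata catalog or the pipeline framework's own lineage store; the point here is only that each transformation leaves an auditable trace.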

Data Quality Issues in Large-Scale Analytics

Data quality issues represent one of the most significant obstacles in big data analytics. These issues arise due to inconsistent formats, human error, system failures, and incomplete data generation.

Common data quality challenges include:

- Missing or incomplete values

- Duplicate records

- Inconsistent units or categories

- Noise and outliers

- Data drift over time

Addressing these issues early in the preprocessing phase is essential to prevent bias, inaccurate predictions, and model instability.

Handling Missing Data Effectively

Missing data handling is a foundational component of data cleaning. In big data contexts, missing values can occur at scale and for multiple reasons, such as sensor malfunctions, user non-response, or system integration errors.

Statistically, missing data falls into three categories: missing completely at random (MCAR), missing at random (MAR), and missing not at random (MNAR). Under MCAR, the probability that a value is missing does not depend on any observed or unobserved data. Under MAR, it depends only on observed variables. Under MNAR, it depends on unobserved information, often the missing value itself.

Choosing the appropriate data cleaning techniques for missing values depends on the nature and volume of missingness. Common approaches include deletion, statistical imputation, and model-based imputation.

Data imputation techniques range from simple mean or median replacement to advanced predictive methods that leverage correlations among variables. The goal is to preserve data distribution and minimize information loss.
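As a minimal sketch of the simplest end of that range, the following replaces missing entries with the median of the observed values. It assumes missing values are represented as `None`; the function name and the sensor readings are made up for illustration.

```python
from statistics import mean, median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


# Hypothetical sensor readings with two gaps and one extreme value.
readings = [21.0, None, 23.5, 22.0, None, 80.0]
print(impute_median(readings))
# The mean of the observed values is pulled upward by the extreme reading,
# which is one reason the median is often preferred for skewed columns.
print(mean(v for v in readings if v is not None))
```

Single-value imputation like this shrinks the variance of the column, so it is usually reasonable only when the share of missing values is small.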

Noise, Outliers, and Anomaly Management

Noise and outliers can distort statistical analysis and machine learning models if left unaddressed. Noise refers to random errors or irrelevant data, while outliers are extreme values that deviate significantly from the norm.

How to handle outliers depends on context. Some outliers are simply errors, while others carry valuable signal, such as fraudulent transactions or other rare events.

Outlier detection methods include statistical techniques, distance-based methods, and model-driven approaches. Z-scores are frequently used to flag values that lie more than a chosen number of standard deviations from the mean. Visualization techniques, such as box plots, are also widely applied during exploratory data analysis.
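The two statistical rules mentioned here can be sketched in a few lines. The thresholds (2.5 standard deviations, 1.5 times the interquartile range) and the sample data are illustrative assumptions, not recommendations. A threshold of 2.5 rather than the conventional 3 is used because in very small samples a single extreme value inflates the standard deviation so much that its own z-score stays below 3.

```python
from statistics import mean, pstdev, quantiles


def zscore_outliers(values, threshold=3.0):
    """Return values whose absolute z-score exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing to flag
    return [v for v in values if abs(v - mu) / sigma > threshold]


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR], the rule box plots use."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical response times with one suspicious reading.
data = [10, 12, 11, 13, 12, 11, 10, 12, 95]
print(zscore_outliers(data, threshold=2.5))
print(iqr_outliers(data))
```

Both functions only flag candidates. Whether a flagged value is removed, capped, or kept is a separate, context-dependent decision.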

Exploratory Data Analysis as a Preprocessing Pillar

Exploratory Data Analysis, commonly known as EDA, plays a strategic role in data preprocessing. It enables analysts to understand data distributions, relationships, and anomalies before applying transformations or models.

EDA techniques include summary statistics, correlation analysis, distribution plots, and dimensionality inspection. These methods inform decisions related to feature selection, transformation strategies, and scaling techniques.

In big data environments, EDA often relies on sampling techniques to make analysis computationally feasible without compromising representativeness.
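A first EDA pass often amounts to profiling each column. The helper below is a small sketch that assumes a numeric column held in a Python list, with `None` marking missing values and at least one observed value; the name `profile` and the sample data are hypothetical.

```python
from statistics import mean, median


def profile(column):
    """Summarize a numeric column: size, missingness, range, and central tendency."""
    observed = [v for v in column if v is not None]
    return {
        "count": len(column),
        "missing": len(column) - len(observed),
        "min": min(observed),
        "max": max(observed),
        "mean": mean(observed),
        "median": median(observed),
    }


# Hypothetical order quantities with one missing entry.
print(profile([1, None, 3, 5]))
```

A large gap between mean and median, or a high missing count, is the kind of signal that feeds the later choices about transformation, imputation, and scaling.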

Data Cleaning Techniques for Consistency and Accuracy

Data cleaning techniques extend beyond missing values and outliers. They also address formatting inconsistencies, invalid entries, and logical errors.

Standard cleaning tasks include:

- Data deduplication to remove redundant records

- Validation of categorical values

- Standardization of units and formats

- Correction of erroneous data entries

Data deduplication is especially important when integrating multiple data sources. Duplicate records inflate dataset size, skew analytics, and increase processing costs.
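Exact-match deduplication on a normalized key is the simplest form of this task. The sketch below assumes records are dictionaries and that trimming whitespace and ignoring case is an acceptable notion of "same value"; the field names are invented for the example.

```python
def normalize_key(record, key_fields):
    """Build a comparison key: trimmed, case-insensitive string per key field."""
    return tuple(str(record.get(f, "")).strip().casefold() for f in key_fields)


def deduplicate(records, key_fields):
    """Keep the first record seen for each normalized key, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = normalize_key(record, key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical customer rows arriving from two systems.
customers = [
    {"email": "Ada@Example.com ", "name": "Ada"},
    {"email": "ada@example.com", "name": "Ada L."},
    {"email": "bob@example.com", "name": "Bob"},
]
print(deduplicate(customers, key_fields=["email"]))
```

Near-duplicates (typos, reordered names) need fuzzy matching or entity resolution, which this sketch does not attempt.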

Data Integration Across Distributed Sources

Data integration combines data from multiple heterogeneous sources into a unified view. In big data analytics, this process is complicated by differences in schemas, formats, and semantics.

Successful integration requires schema alignment, entity resolution, and conflict resolution. Metadata and data provenance play critical roles in tracking integrated data flows and maintaining consistency.
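The three requirements can be illustrated on a toy scale. In this sketch, schema alignment is a field-renaming map, entity resolution is an exact match on a shared key, and conflicts are resolved by letting the most recently updated record win. All field names, the `customer_id` key, and the "newest wins" policy are assumptions chosen for the example; it also assumes every record carries the key and an ISO-formatted date.

```python
def align(record, field_map):
    """Rename source-specific fields to the unified schema, dropping unmapped ones."""
    return {target: record[source]
            for source, target in field_map.items() if source in record}


def integrate(sources, key="customer_id", version_field="updated_at"):
    """Merge records from several sources into one record per entity."""
    merged = {}
    for records, field_map in sources:
        for raw in records:
            rec = align(raw, field_map)
            current = merged.get(rec[key])
            if current is None:
                merged[rec[key]] = rec
            elif rec[version_field] >= current[version_field]:
                merged[rec[key]] = {**current, **rec}  # newer record wins conflicts
            else:
                merged[rec[key]] = {**rec, **current}  # older record only fills gaps
    return merged


# Two hypothetical systems describing the same customer with different schemas.
crm = [{"id": 1, "mail": "a@x.com", "modified": "2024-01-05"}]
billing = [{"cust": 1, "email": "a@y.com", "plan": "pro", "ts": "2024-03-01"}]

unified = integrate([
    (crm, {"id": "customer_id", "mail": "email", "modified": "updated_at"}),
    (billing, {"cust": "customer_id", "email": "email", "plan": "plan", "ts": "updated_at"}),
])
print(unified)
```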

Integrated datasets enable holistic analysis, allowing organizations to derive insights that would not be possible from isolated data sources.

Data Transformation Methods and Their Role

Data transformation converts data into formats or structures suitable for analysis. Common techniques include aggregation, encoding, normalization, and mathematical transformations.

Logarithmic transformation and Box-Cox transformation are frequently used to stabilize variance and make skewed distributions more symmetric. Both require strictly positive inputs. These transformations can improve model performance, particularly in regression and statistical learning contexts.
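For a fixed parameter lambda, the Box-Cox transform is (x^lambda - 1) / lambda, and it reduces to the natural logarithm when lambda is 0. The sketch below applies that formula directly. Choosing lambda (normally estimated from the data) is outside its scope, and the revenue figures are invented.

```python
import math


def box_cox(values, lam):
    """Apply the Box-Cox transform with a fixed lambda.

    lam == 0 gives the natural log; otherwise (x**lam - 1) / lam.
    """
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1) / lam for v in values]


# Hypothetical right-skewed revenue figures.
revenue = [120.0, 150.0, 180.0, 900.0, 12000.0]
print(box_cox(revenue, 0))    # log transform
print(box_cox(revenue, 0.5))  # square-root-like transform
```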

Transformation decisions should be guided by analytical objectives, domain knowledge, and exploratory analysis results.

Encoding Categorical Data for Machine Learning

Machine learning algorithms typically require numerical input, making data encoding techniques essential during preprocessing.

One-hot encoding converts categorical variables into binary indicator variables, preserving category independence. Label encoding assigns numerical labels to categories, which is suitable for ordinal data but may introduce unintended relationships for nominal variables. Target encoding replaces categories with statistical summaries derived from the target variable, offering efficiency in high-cardinality scenarios.

Selecting the appropriate encoding method depends on data size, model type, and feature characteristics.
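The three encodings can be compared side by side in plain Python. This is a sketch under simple assumptions: categories are strings, the ordinal order is supplied by the caller, and target encoding uses a per-category mean with an optional smoothing term toward the global mean (the `smoothing` parameter is one common variant, not the only definition).

```python
from collections import defaultdict


def one_hot(values):
    """Return one binary indicator row per value, plus the column order."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return rows, categories


def label_encode(values, order):
    """Map each category to its position in an explicit ordinal order."""
    index = {c: i for i, c in enumerate(order)}
    return [index[v] for v in values]


def target_encode(values, targets, smoothing=1.0):
    """Replace each category with a smoothed mean of the target for that category."""
    global_mean = sum(targets) / len(targets)
    sums, counts = defaultdict(float), defaultdict(int)
    for v, t in zip(values, targets):
        sums[v] += t
        counts[v] += 1
    encoding = {c: (sums[c] + smoothing * global_mean) / (counts[c] + smoothing)
                for c in counts}
    return [encoding[v] for v in values]


print(one_hot(["red", "blue", "red"]))
print(label_encode(["low", "high", "medium"], order=["low", "medium", "high"]))
print(target_encode(["a", "a", "b"], [1, 0, 1]))
```

Because target encoding uses the label, the mapping should be computed on training data only and then applied to validation and test data; otherwise it leaks the target into the features.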

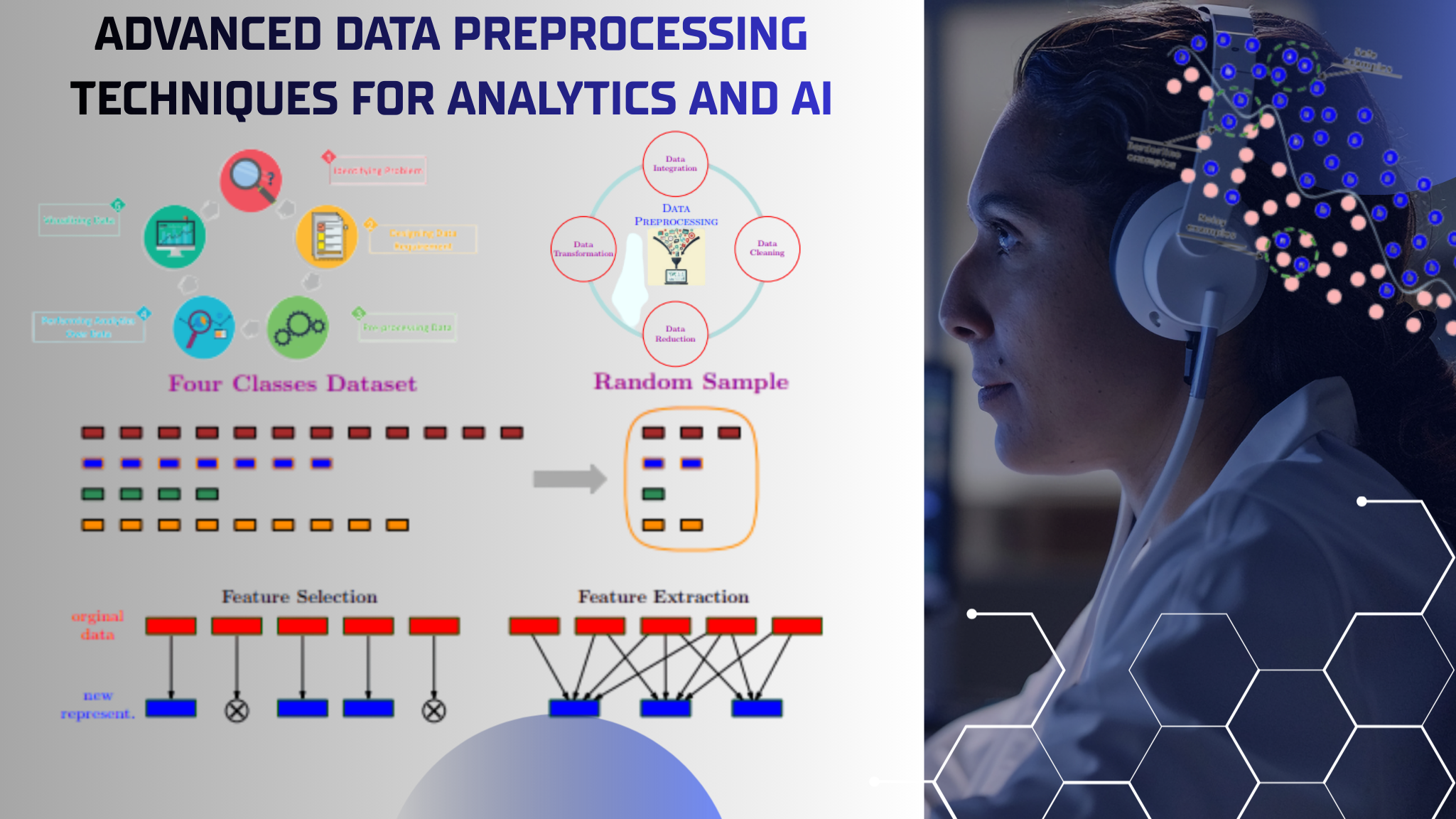

Feature Selection and Feature Extraction

Feature engineering lies at the heart of data preprocessing. Feature selection focuses on identifying the most relevant variables, while feature extraction creates new variables by transforming existing ones.

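Choosing between feature selection and feature extraction is a strategic decision. Selection reduces noise and preserves interpretability because the original variables are retained, whereas extraction can capture complex patterns and relationships at the cost of less interpretable features.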

In big data analytics, feature selection reduces computational overhead and the risk of overfitting, while feature extraction supports advanced modeling capabilities.
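One of the simplest selection filters drops features that barely vary, since a near-constant column carries little information for a model. The sketch below assumes columns are stored as a dictionary of numeric lists; the function name, threshold, and data are illustrative.

```python
from statistics import pvariance


def select_by_variance(columns, min_variance=0.0):
    """Keep only columns whose population variance exceeds min_variance."""
    return {name: values for name, values in columns.items()
            if pvariance(values) > min_variance}


# Hypothetical feature table: one informative column, one constant column.
features = {
    "age": [20, 30, 40],
    "country_code": [1, 1, 1],
}
print(list(select_by_variance(features)))
```

Variance is scale-dependent, so a non-zero threshold only makes sense when the columns are on comparable scales.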

Dimensionality Reduction and the Curse of Dimensionality

As datasets grow in size and complexity, dimensionality becomes a major challenge. The curse of dimensionality refers to the exponential increase in data sparsity and computational cost as feature counts rise.

Dimensionality reduction techniques address this issue by projecting data into lower-dimensional spaces while preserving essential information. Principal Component Analysis (PCA) is one of the most widely used methods, transforming correlated variables into orthogonal components ranked by variance.

Dimensionality reduction improves model efficiency, reduces overfitting, and enhances visualization.
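PCA can be sketched with a singular value decomposition of the mean-centered data. The version below uses NumPy and is meant as an illustration of the idea rather than a replacement for a library implementation; the function name and the toy matrix are assumptions.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components.

    Returns the projected data, the component directions (one per row),
    and the share of total variance explained by each kept component.
    """
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of vt are orthonormal principal directions, ordered by singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained_ratio = (s ** 2) / np.sum(s ** 2)
    return centered @ components.T, components, explained_ratio[:n_components]


# Toy data: the second feature is exactly twice the first,
# so a single component should capture essentially all the variance.
X = [[1, 2], [2, 4], [3, 6], [4, 8]]
projected, components, ratio = pca(X, n_components=1)
print(projected.shape, ratio)
```

Because PCA ranks directions by variance, features on very different scales are usually standardized first so that no single feature dominates the components.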

Data Reduction Strategies in Big Data

Data reduction complements dimensionality reduction by decreasing dataset size without sacrificing analytical value. Techniques include aggregation, sampling, and compression.

Data sampling techniques are particularly valuable for exploratory analysis and rapid prototyping. They allow analysts to work with manageable subsets while retaining representative characteristics of the full dataset.
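When the dataset is too large to hold in memory, or arrives as a stream of unknown length, reservoir sampling draws a uniform random sample in a single pass. The following is a sketch of the classic single-pass variant; the function name and the `seed` parameter are choices made for this example.

```python
import random


def reservoir_sample(stream, k, seed=None):
    """Return k items chosen uniformly at random from an iterable of unknown length."""
    rng = random.Random(seed)
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            sample.append(item)       # fill the reservoir first
        else:
            j = rng.randint(0, i)     # inclusive on both ends
            if j < k:
                sample[j] = item      # replace with probability k / (i + 1)
    return sample


# Simulate a large stream of event IDs without materializing it as a list.
events = (event_id for event_id in range(1_000_000))
subset = reservoir_sample(events, k=5, seed=42)
print(subset)
```

Uniform sampling can under-represent rare groups; when those matter, stratified sampling by group is the usual alternative.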

Effective data reduction reduces storage costs and accelerates processing in large-scale analytics platforms.

Scaling and Standardization in Machine Learning

Scaling and normalization in machine learning are essential when algorithms are sensitive to feature magnitudes. Data scaling ensures that variables contribute proportionally to distance-based or gradient-based models.

Min-max scaling transforms values into a fixed range, typically between zero and one. Z-score normalization standardizes data based on mean and standard deviation, centering features around zero.

Scaling improves convergence and stability for gradient-based and distance-based algorithms. Tree-based models, by contrast, are largely insensitive to feature scale.
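Both scalers reduce to a one-line formula per value. The sketch below uses the population standard deviation and returns a constant when a column has no spread; those edge-case choices, like the function names, are assumptions made for the example.

```python
from statistics import mean, pstdev


def min_max_scale(values, low=0.0, high=1.0):
    """Linearly rescale values into [low, high]."""
    vmin, vmax = min(values), max(values)
    if vmin == vmax:
        return [low for _ in values]  # no spread: map everything to the lower bound
    return [low + (v - vmin) * (high - low) / (vmax - vmin) for v in values]


def z_score_scale(values):
    """Center values on zero and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


print(min_max_scale([10, 20, 30]))
print(z_score_scale([2, 4, 4, 4, 5, 5, 7, 9]))
```

In a modeling workflow the minimum, maximum, mean, and standard deviation should be computed on the training data and reused unchanged for validation and test data.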

Addressing Class Imbalance in Preprocessing

Class imbalance in data occurs when certain outcome categories are significantly underrepresented. This imbalance can bias predictive models and degrade performance.

Preprocessing strategies include resampling techniques, synthetic data generation, and algorithmic adjustments. Addressing imbalance during preprocessing ensures fair and reliable model outcomes.
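Random oversampling is the most basic resampling technique: minority-class rows are duplicated at random until every class matches the size of the largest one. The sketch below is a plain-Python illustration with hypothetical names and data, not a substitute for a dedicated library.

```python
import random
from collections import Counter, defaultdict


def random_oversample(rows, labels, seed=None):
    """Duplicate minority-class rows at random until all classes are equally sized."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)
    target = max(len(members) for members in by_class.values())

    out_rows, out_labels = [], []
    for label, members in by_class.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        for row in members + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels


# Hypothetical transactions: 8 legitimate, 2 fraudulent.
rows = list(range(10))
labels = ["ok"] * 8 + ["fraud"] * 2
balanced_rows, balanced_labels = random_oversample(rows, labels, seed=0)
print(Counter(balanced_labels))
```

Resampling should be applied to the training split only. Oversampling before the train/test split places copies of the same row on both sides and inflates evaluation scores.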

Importance of Data Preprocessing in Machine Learning

The importance of data preprocessing in machine learning cannot be overstated. Preprocessing directly influences model accuracy, generalization, and interpretability.

Well-preprocessed data reduces noise, highlights meaningful patterns, and enables algorithms to learn effectively. Conversely, poorly prepared data undermines even the most advanced models.

In enterprise environments, preprocessing also supports compliance, scalability, and operational efficiency.

Governance, Metadata, and Enterprise Readiness

As organizations scale analytics initiatives, governance becomes integral to preprocessing. Metadata in data processing enables lineage tracking, version control, and policy enforcement.

Data provenance supports trust, accountability, and reproducibility in analytical workflows. Together, these elements ensure that preprocessing pipelines meet enterprise-grade standards.

Conclusion:

Data preprocessing in big data analytics is far more than a preliminary technical step. It is a strategic discipline that determines the success or failure of analytics, machine learning, and AI initiatives. From data collection and quality assurance to transformation, reduction, and feature engineering, preprocessing shapes the analytical foundation upon which insights are built.

By addressing data quality issues, handling missing data intelligently, managing outliers, and applying appropriate scaling and transformation techniques, organizations unlock the full potential of their data assets. As big data continues to grow in scale and complexity, robust preprocessing frameworks will remain essential for sustainable, trustworthy, and high-impact analytics.

In an era where data-driven decisions define competitive advantage, mastering data preprocessing is not optional; it is imperative.

FAQs:

- Why is data preprocessing critical in big data analytics?

Data preprocessing ensures that large and complex datasets are accurate, consistent, and suitable for analysis, directly influencing the reliability of insights and machine learning model performance.

- How does data preprocessing differ in big data environments compared to traditional analytics?

Big data preprocessing must handle high volume, velocity, and variety, often using distributed systems and automated pipelines to manage diverse formats and real-time data streams.

- What are the most common data quality issues addressed during preprocessing?

Typical issues include missing values, duplicate records, inconsistent formats, noisy data, and extreme outliers that can distort analytical outcomes.

- When should feature selection be preferred over feature extraction?

Feature selection is ideal when interpretability and computational efficiency are priorities, while feature extraction is better suited for capturing complex patterns in high-dimensional data.

- How do scaling and normalization affect machine learning models?

Scaling and normalization ensure that features contribute proportionally during model training, improving convergence speed and accuracy, especially for distance-based algorithms.

- What role does metadata play in data preprocessing?

Metadata provides context about data structure, origin, and transformations, supporting governance, traceability, and consistent preprocessing across analytical workflows.

- Can improper preprocessing negatively impact business decisions?

Yes, inadequate preprocessing can introduce bias, reduce model accuracy, and lead to misleading insights, ultimately affecting strategic and operational decision-making.