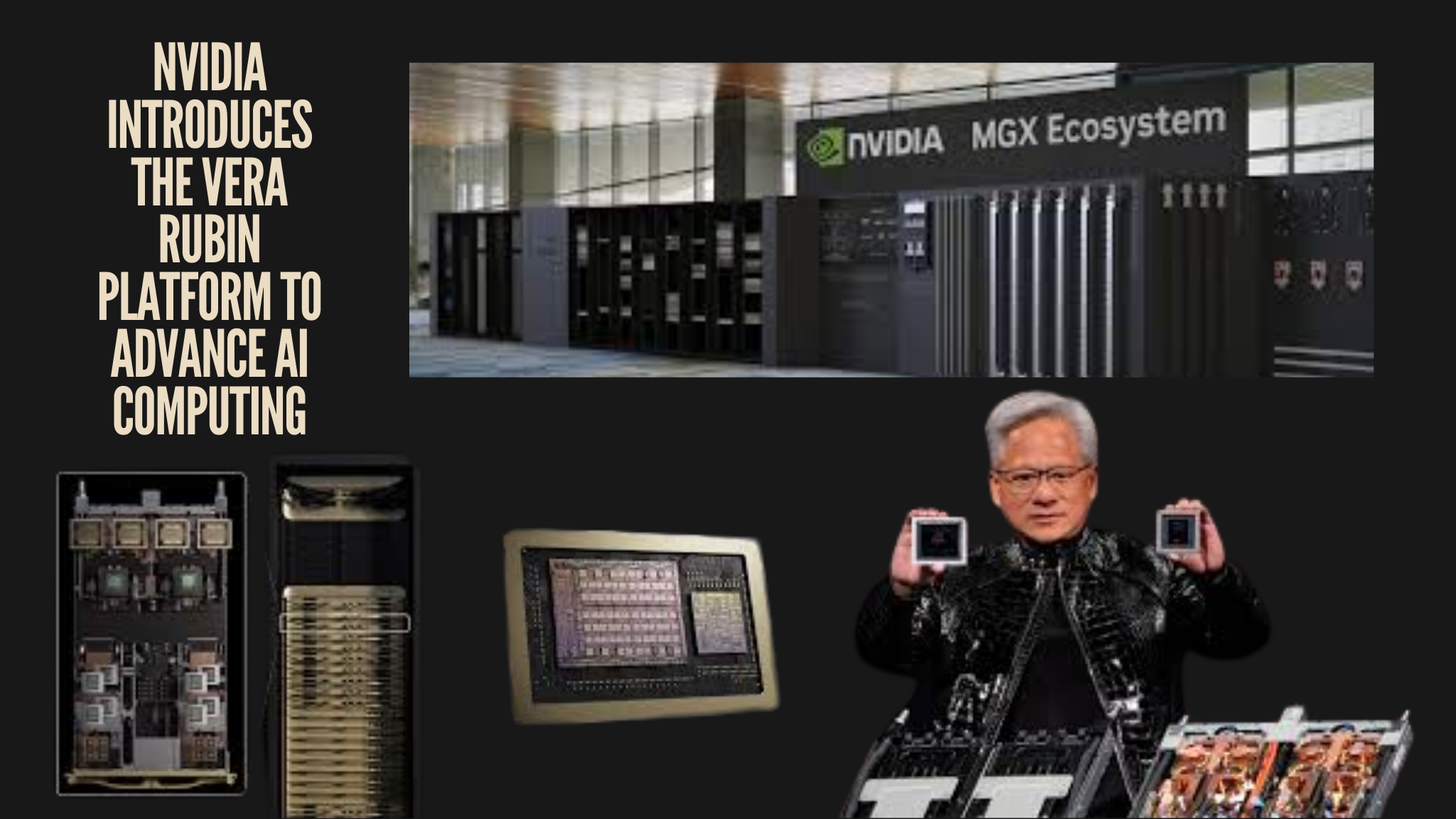

This report explores Nvidia’s early unveiling of the Vera Rubin AI computing platform at CES 2026, highlighting how its new architecture aims to deliver higher training performance, improved efficiency, and secure, rack-scale AI infrastructure for next-generation data centers.

Overview of the Announcement

At CES 2026, Nvidia revealed its next-generation AI computing platform, Vera Rubin, marking an important milestone in the company’s data center and artificial intelligence roadmap. The launch comes after a period of strong growth driven by widespread adoption of the Blackwell and Blackwell Ultra GPU families, which set new standards for AI performance across cloud and enterprise environments.

A Platform-Centric AI Architecture

Vera Rubin is designed as a fully integrated AI supercomputing platform rather than a single processor upgrade. The architecture combines several specialized components: the Vera CPU, the Rubin GPU, the sixth-generation NVLink interconnect, ConnectX-9 networking, BlueField-4 data processing units (DPUs), and Spectrum-X Ethernet switching. Together, these elements form a rack-scale system built to handle complex AI workloads efficiently and securely.

Performance and Efficiency Gains

According to Nvidia, the Rubin GPU delivers up to five times the AI training compute of its predecessor. The platform is engineered to train large mixture-of-experts (MoE) models with significantly fewer GPUs while also lowering per-token and energy costs. These improvements target growing concerns about the economics and scalability of large-scale AI development.

Availability and Industry Impact

Nvidia expects partners to begin offering products and services based on the Vera Rubin platform in the second half of 2026. With its emphasis on performance, efficiency, and trusted computing, Vera Rubin is positioned to influence the next phase of AI infrastructure deployment across data centers worldwide.